Final Word Before The Competition

Preparation for PiWars was really fun. The previous competition's anxiety - will we manage to do anything or not - didn't affect us this time. It was more - can we prepare to do ALL the challenges to the best of our abilities or not.

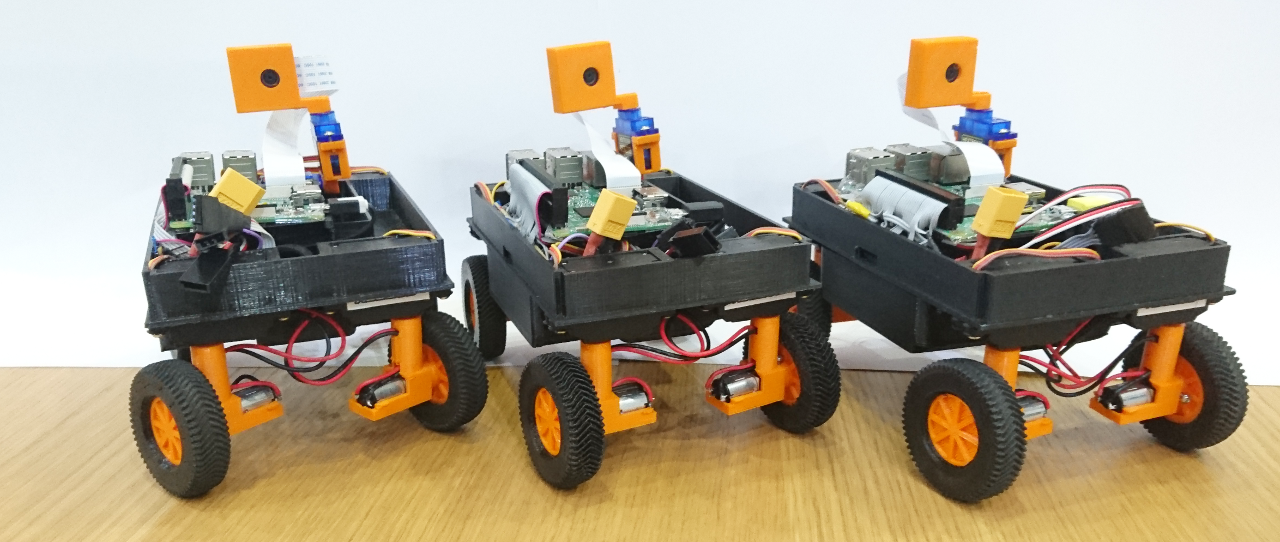

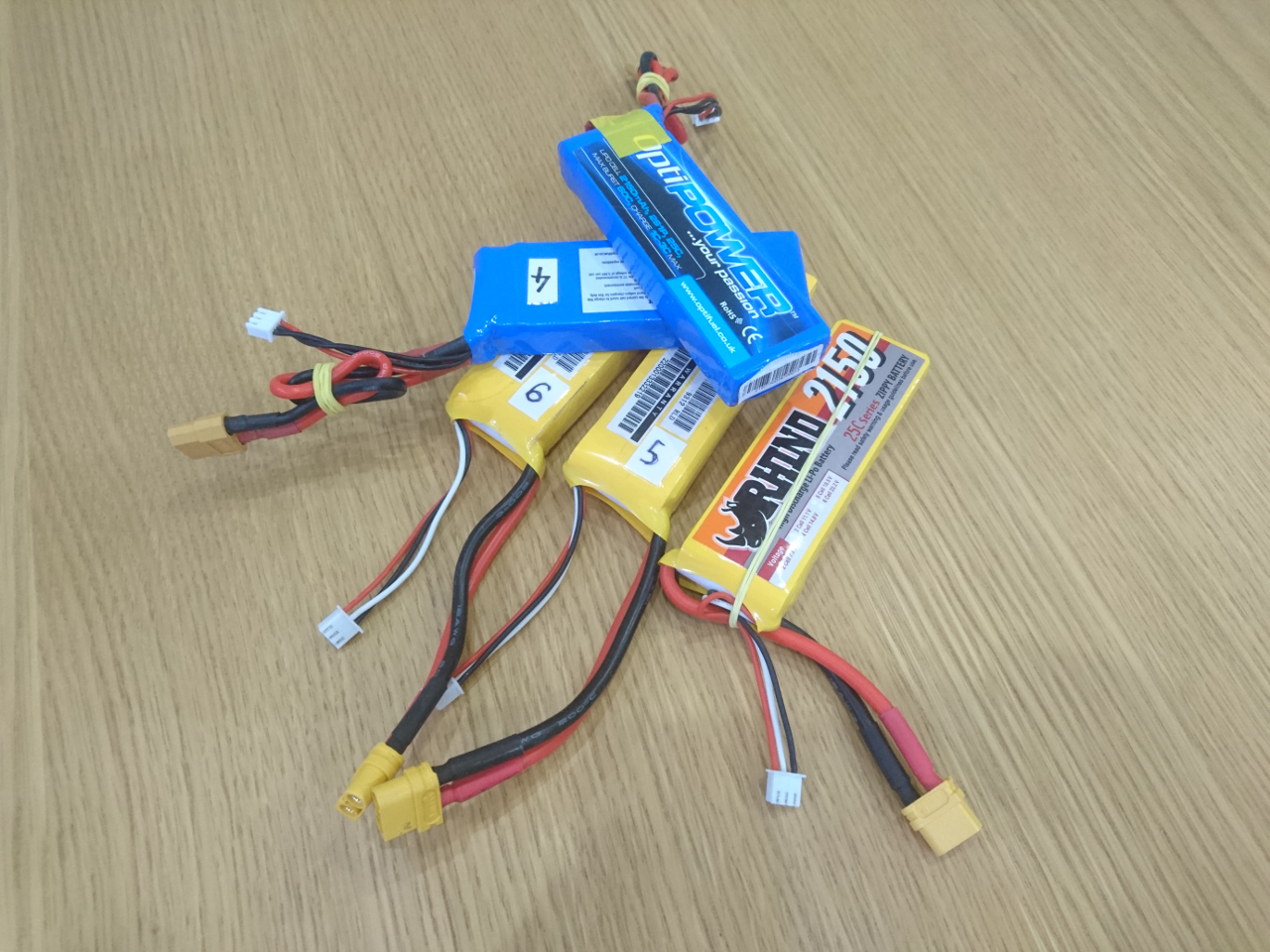

Rovers

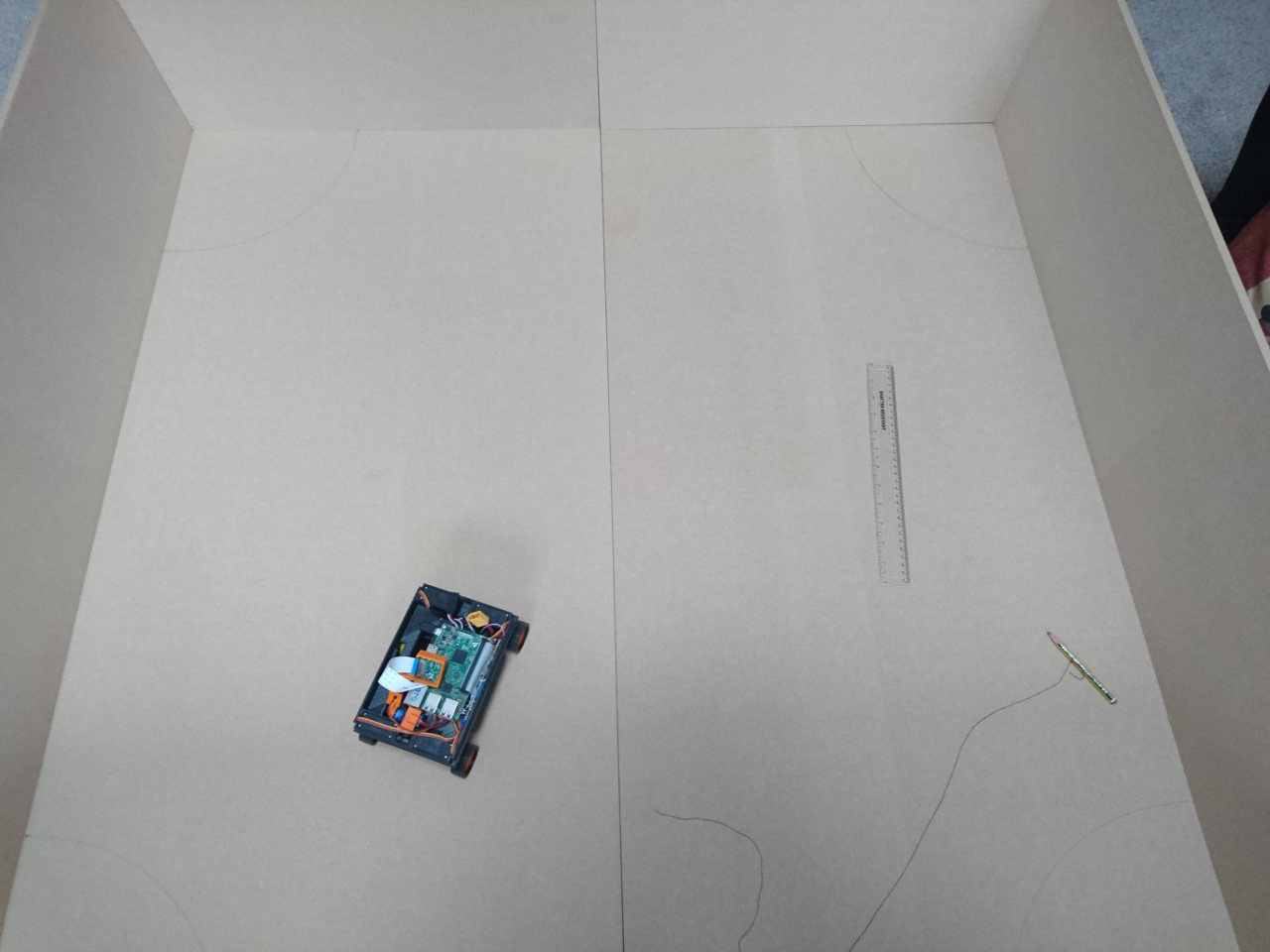

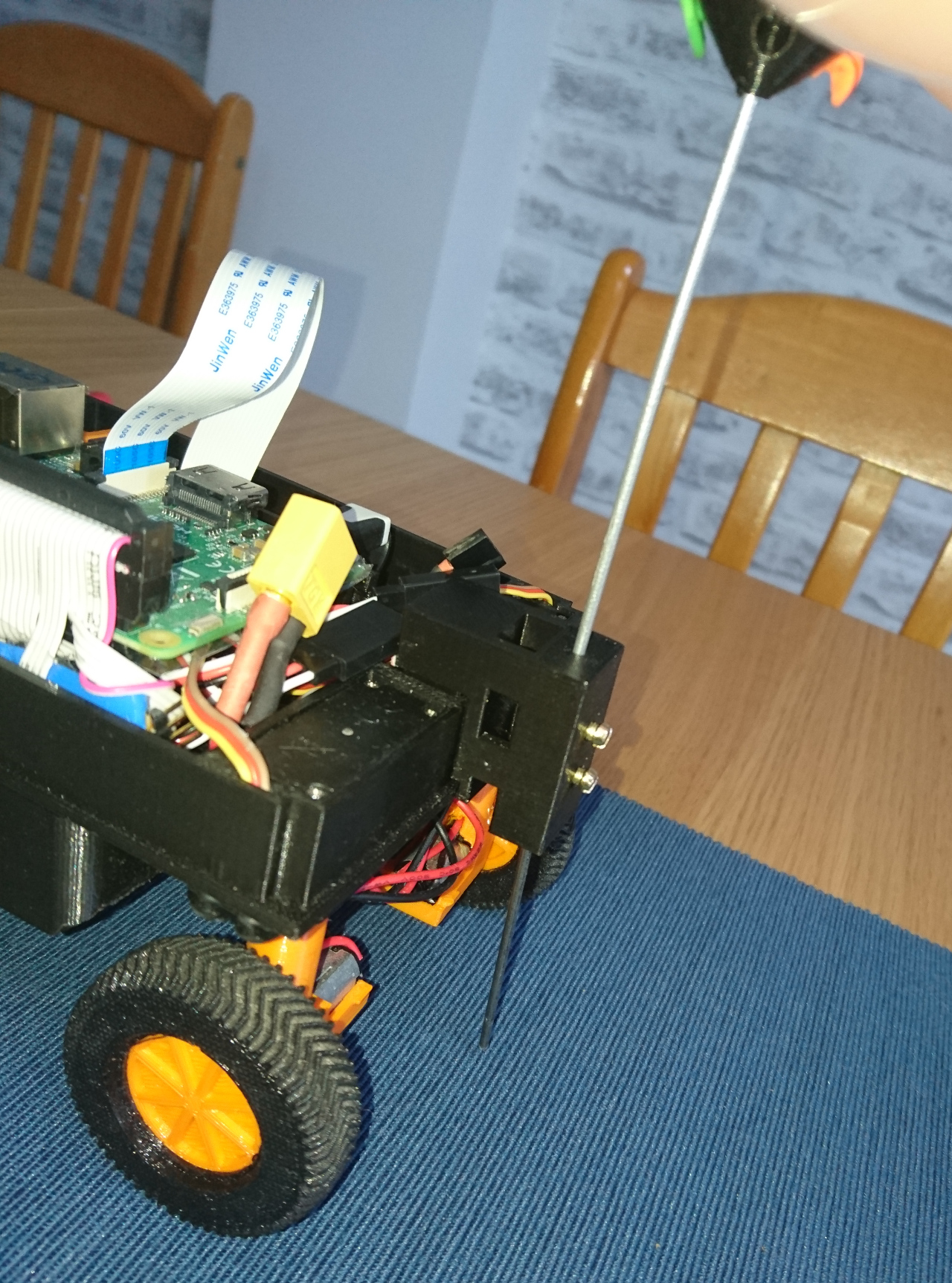

The rovers themselves turned out to be a really great source of fun, and the idea of having three for the club worked quite well. That magic number allowed us to always have one spare, one with better motors, one with the latest software (before it was replicated to the others), one with bigger wheels, one we like the best, one that always got one wire to the motor unsoldered somehow, one that had connector wires somehow shorter and harder to use than the others, one with a broken motor, etc...

At one point only one SD card was really working, and it was then cloned three times. After all, for SF fans: "The Ramans do everything in threes".

Also, it will, hopefully, give us the kind of redundancy we didn't have last time. Last time we had a 'preferred' rover (that worked well), one that might be used as a replacement, and the 'old' one (with hardware and software lagging). This time all three are up to the job.

Software

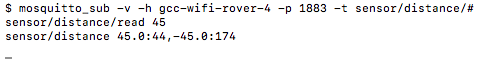

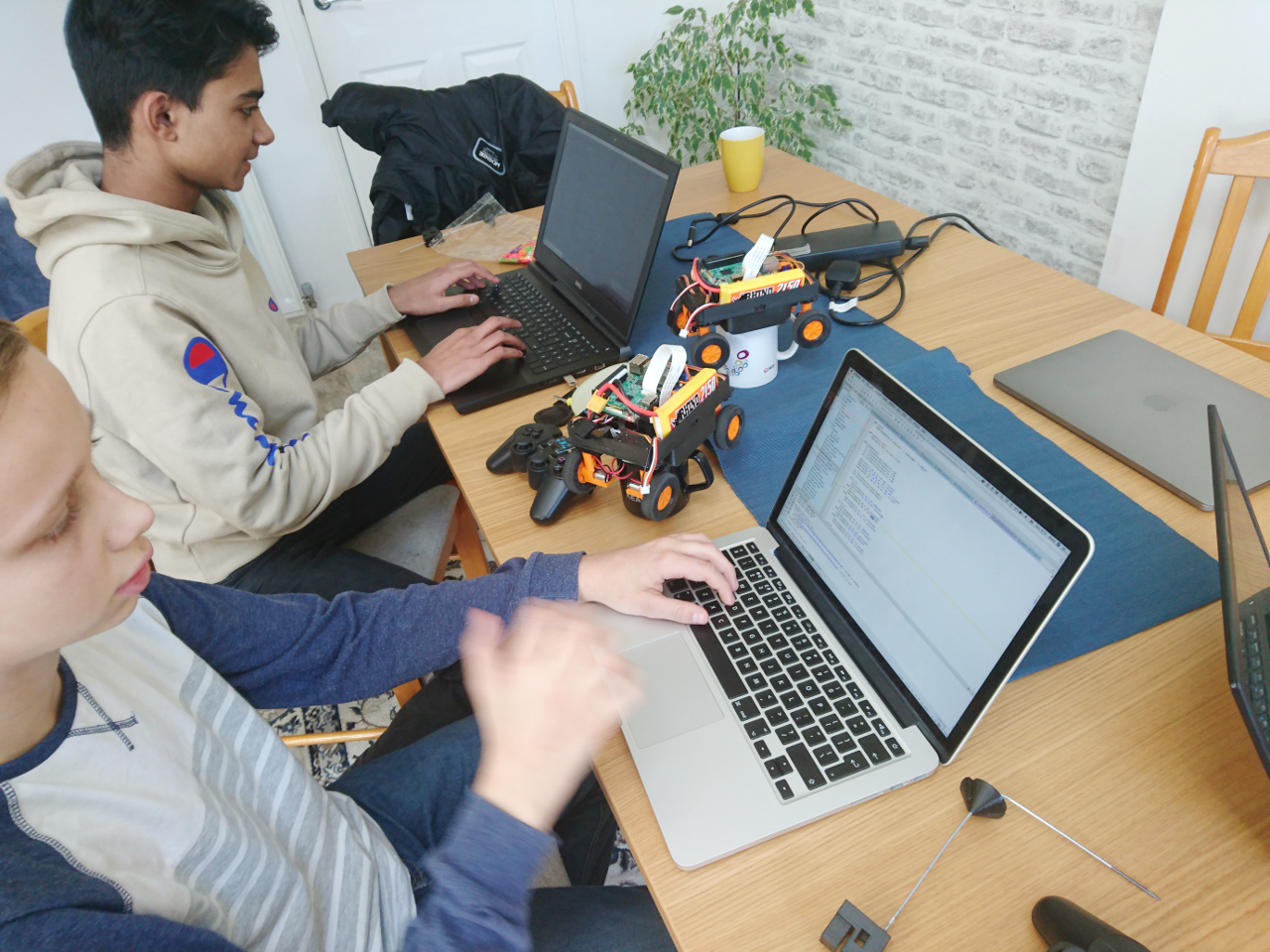

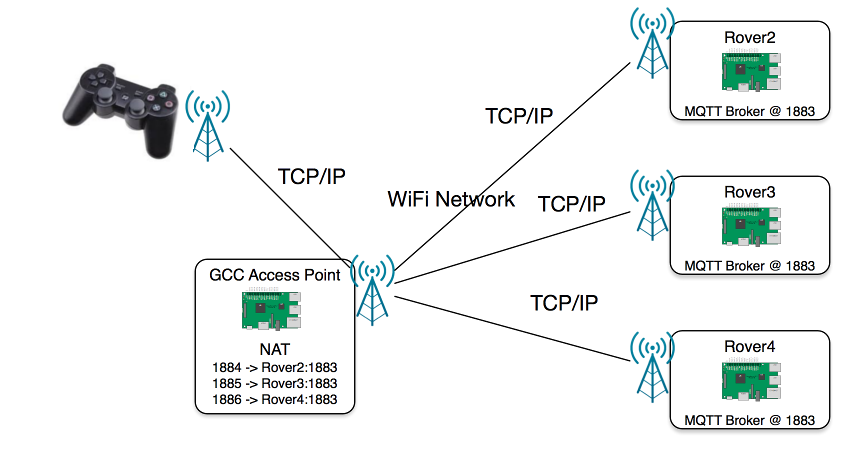

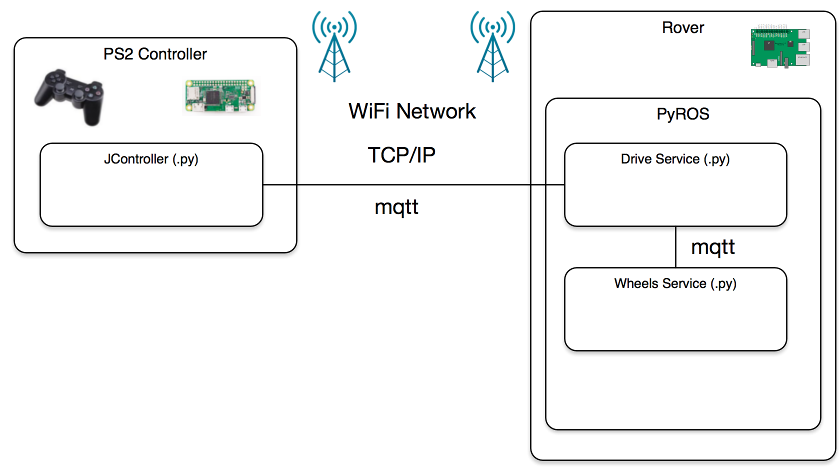

Pyros worked. It worked well both locally and in the school environment. We were able to use and code for the rovers from IDLE (the basic Python IDE) on school computers as well as on Unix-based OSes (Linux/OSX). There were some issues with message throughput - bugs that were fixed as we went along. One particular bug was about the limit on the number of messages that can be serviced in one iteration of an agent's or service's loop. The limit still exists, but at least it is much higher and we can consider it a 'known problem with a workaround'. It also allowed us to streamline and optimise some other aspects (sending one message for all wheels' speed and orientation instead of 4 for speed and 4 for orientation, 50 times a second).

Pyros matured as we progressed through preparation for PiWars, and now we can set up WiFi details through Pyros, read messaging statistics, read storage and - the fanciest newcomer - auto-discover rovers using broadcast UDP packets. All our client programs now autodetect existing rovers on the network. How many teams have their rovers discovered like that?
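For readers curious how discovery over broadcast UDP can work in principle, here is a minimal sketch. It is not the actual Pyros code: the port number, the message format and all function names are made up for illustration. A client shouts one query to the whole network and every rover that hears it answers with its details.

```python
import socket

DISCOVERY_PORT = 0xD15C      # hypothetical port, not necessarily what Pyros uses
QUERY = b"Q#WHO_IS_THERE"    # hypothetical query payload


def build_reply(name, ip, port):
    """Encode a rover's answer as a simple 'A#KEY=value;KEY=value' byte string."""
    return "A#NAME={};IP={};PORT={}".format(name, ip, port).encode("utf-8")


def parse_reply(data):
    """Decode an answer into a dict, or return None if it is not an answer."""
    text = data.decode("utf-8", errors="replace")
    if not text.startswith("A#"):
        return None
    fields = {}
    for pair in text[2:].split(";"):
        key, sep, value = pair.partition("=")
        if sep:
            fields[key] = value
    return fields


def discover(timeout=1.0):
    """Broadcast one query and collect answers until none arrives for `timeout` seconds."""
    found = []
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        # Without SO_BROADCAST the OS refuses to send to the broadcast address.
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.settimeout(timeout)
        sock.sendto(QUERY, ("255.255.255.255", DISCOVERY_PORT))
        try:
            while True:
                data, _addr = sock.recvfrom(1024)
                rover = parse_reply(data)
                if rover is not None:
                    found.append(rover)
        except socket.timeout:
            pass  # silence on the network: assume everybody has answered
    return found
```

On the rover side a small service would listen on the same port and send `build_reply(...)` back to whoever asked. UDP suits this job because the client does not need to know any address in advance.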

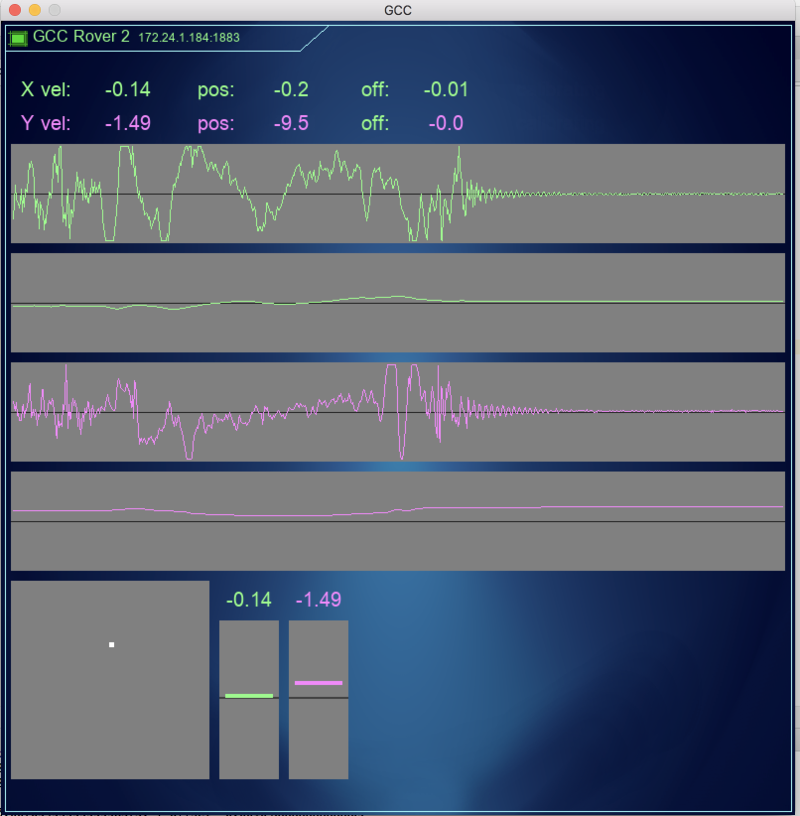

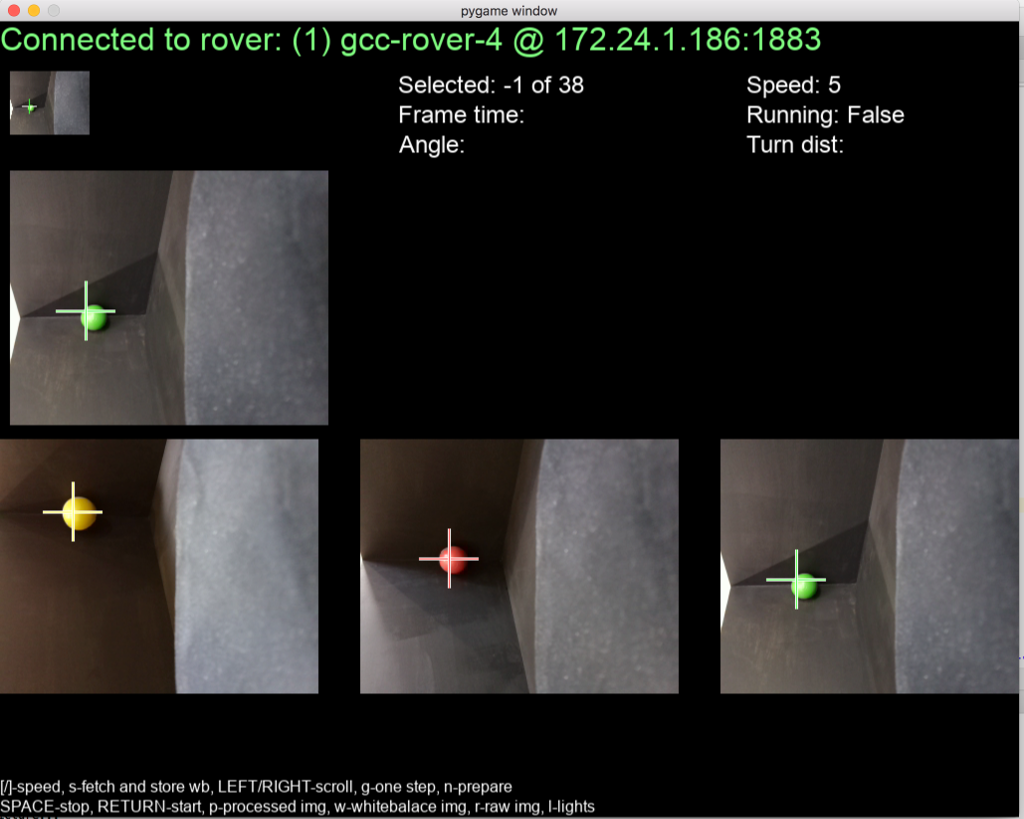

Speaking of client software - a few 'distraction' weekends gave us a new look for our client apps:

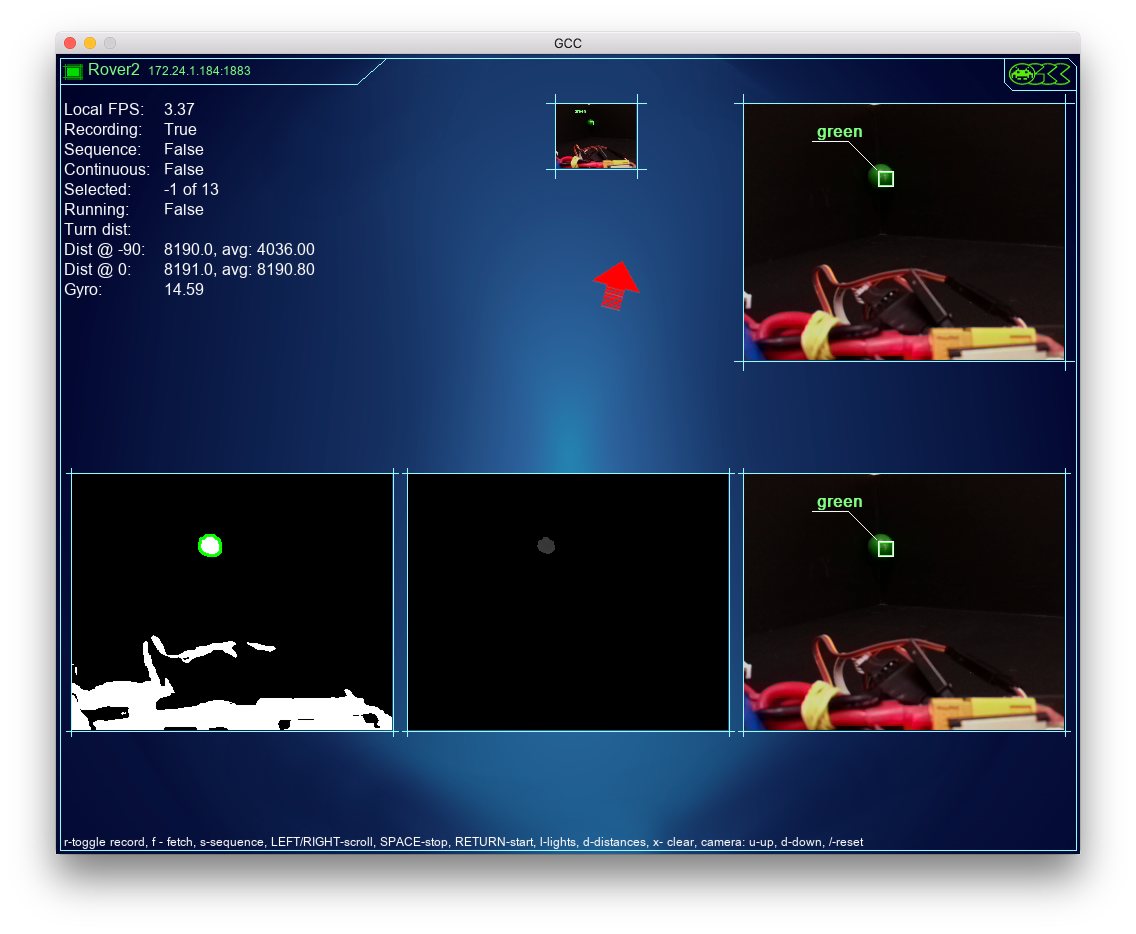

Or a blue background and a flashy logo in the right corner:

But the most interesting was OpenCV. Shame we didn't start with it sooner. It is a completely new area with hours of fun in computer vision and image processing. At first it seemed quite scary and complex, but splitting an image into HSV components and analysing them separately, finding contours, finding contours' properties such as area and diameter, applying them as masks to the hue channel and doing histograms, drawing 'debug' pictures and shapes - all of it on its own was worth going to PiWars.

Also, it is worth mentioning that we managed some of the stuff we failed to implement last time. For instance - making a lunge attack and orbiting around the opponent.

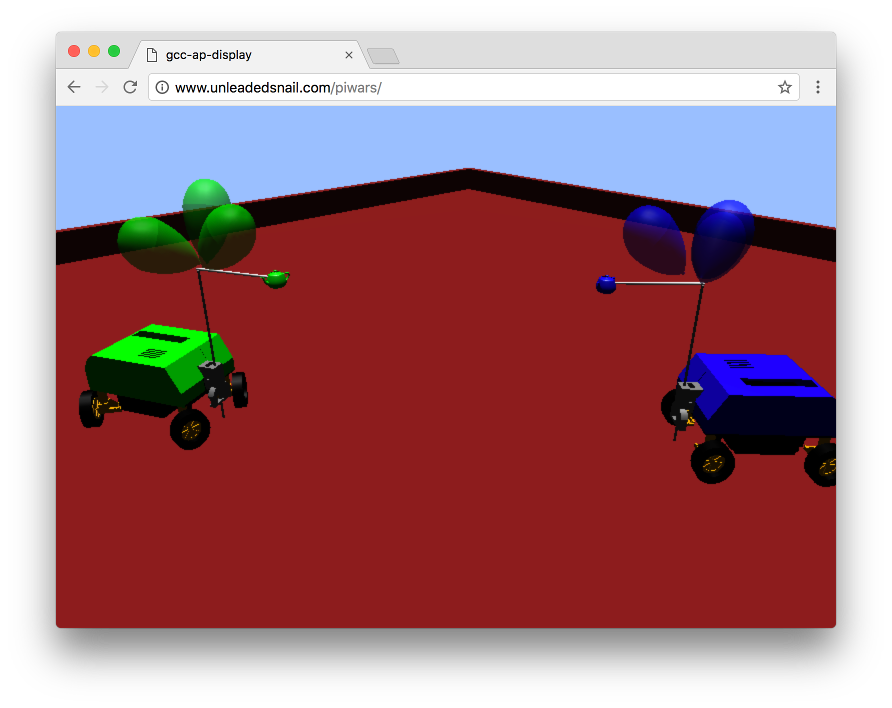

Speaking of distractions - this one was something completely different and yet PiWars related. Our club member David made an online interactive PiNoon game!

Given more time, I am sure it will grow to be properly online so people can battle one another (not only on the same computer, as is currently the case).

Hardware

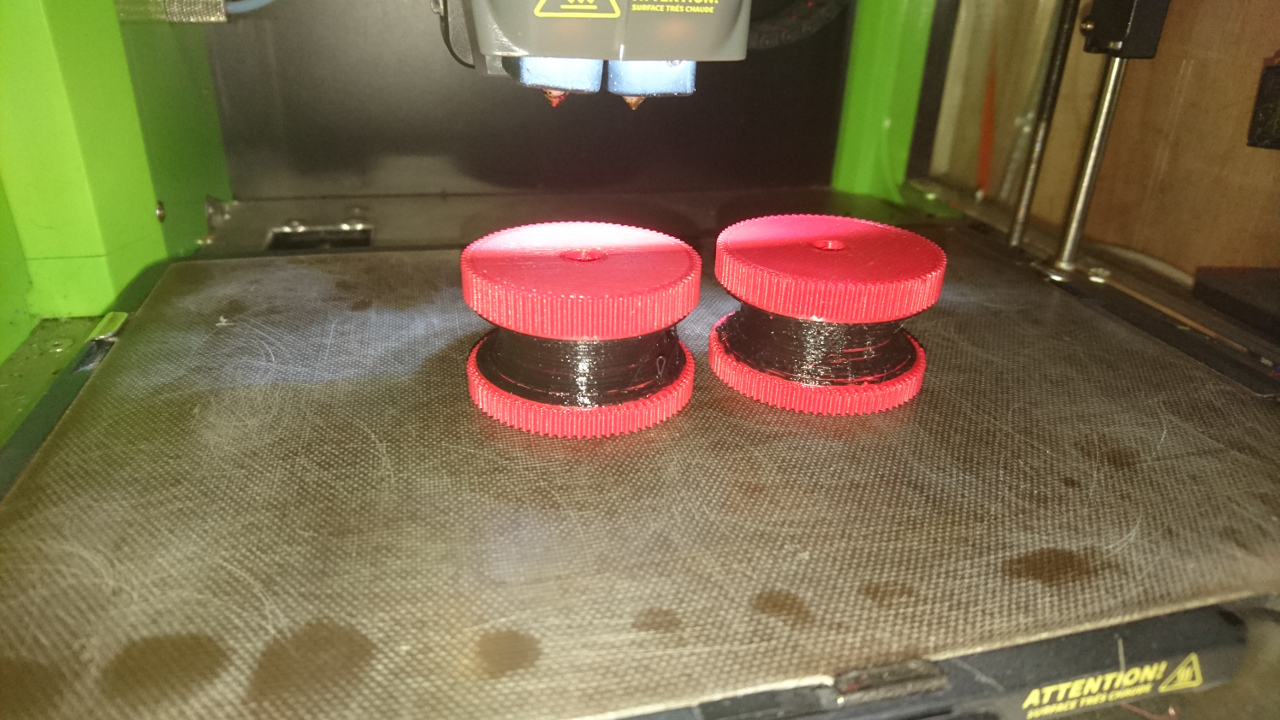

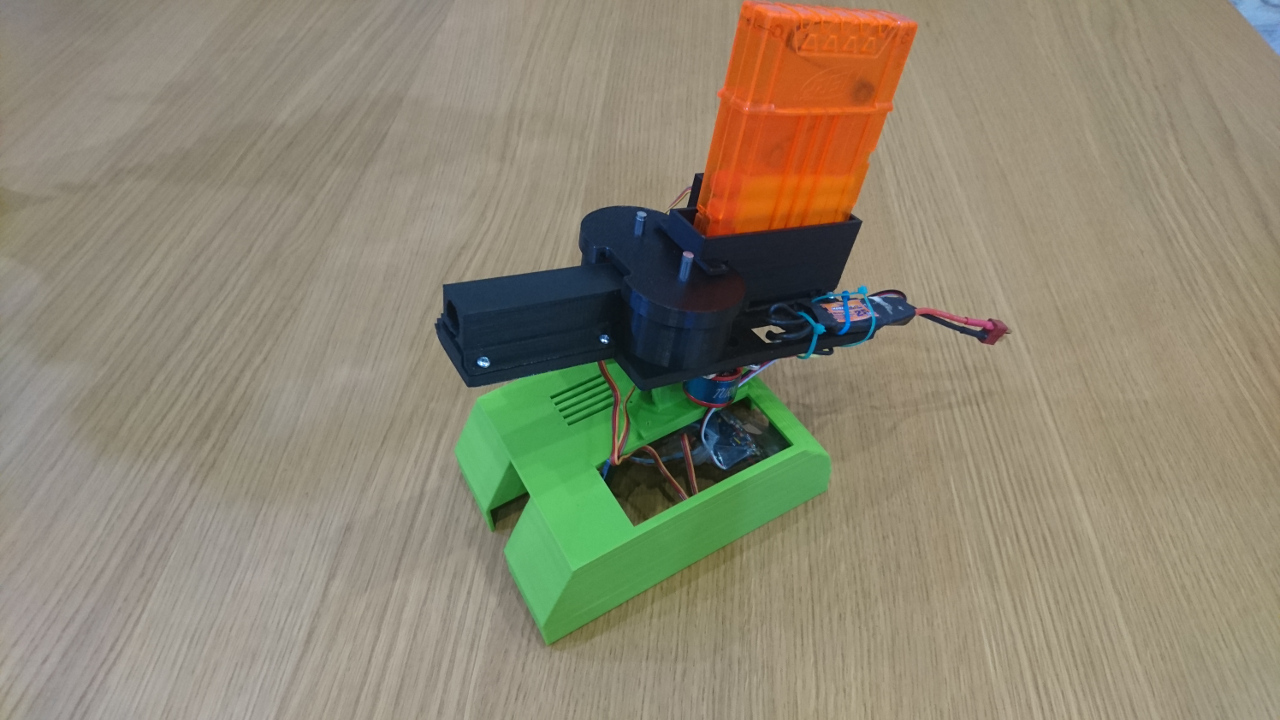

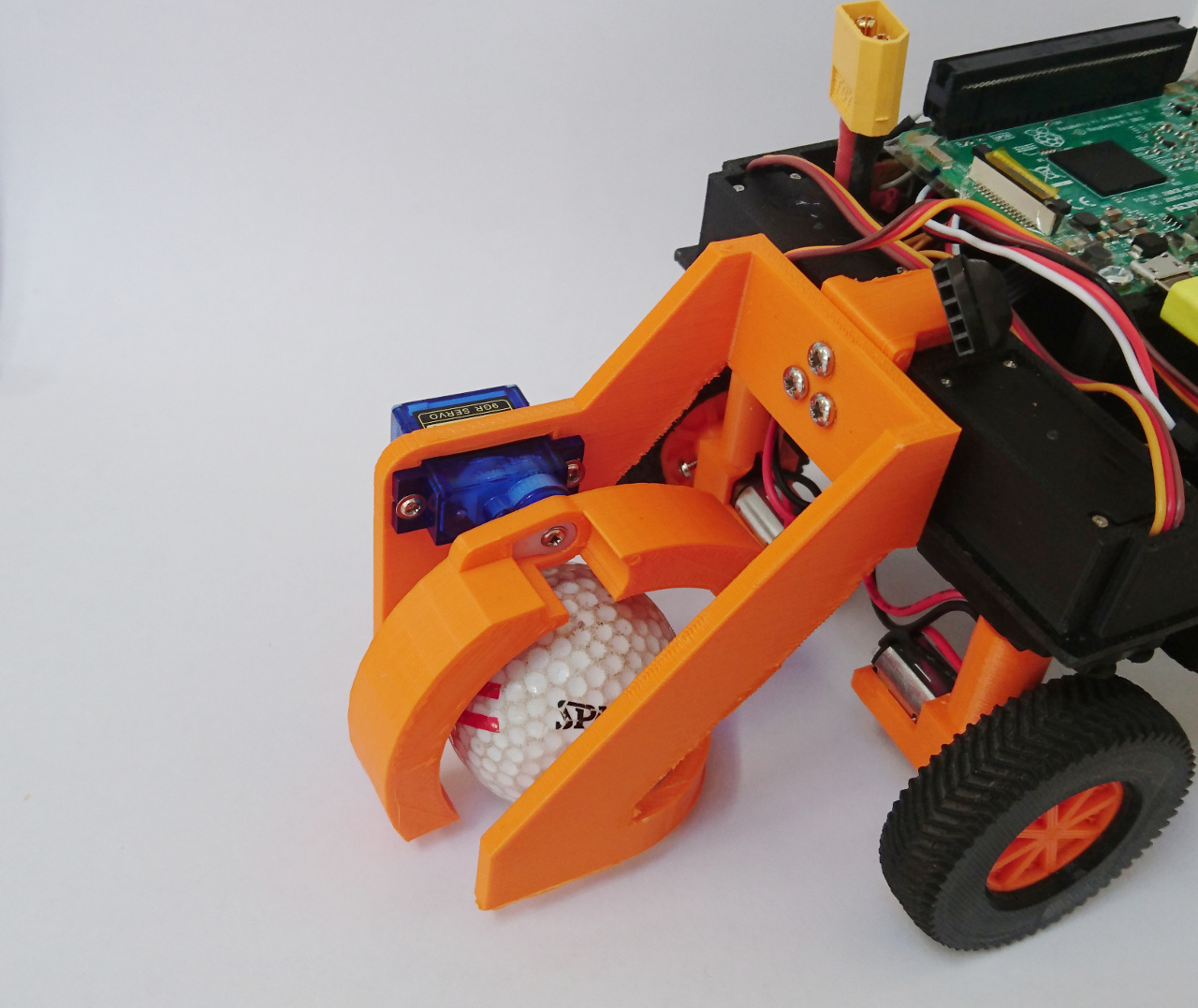

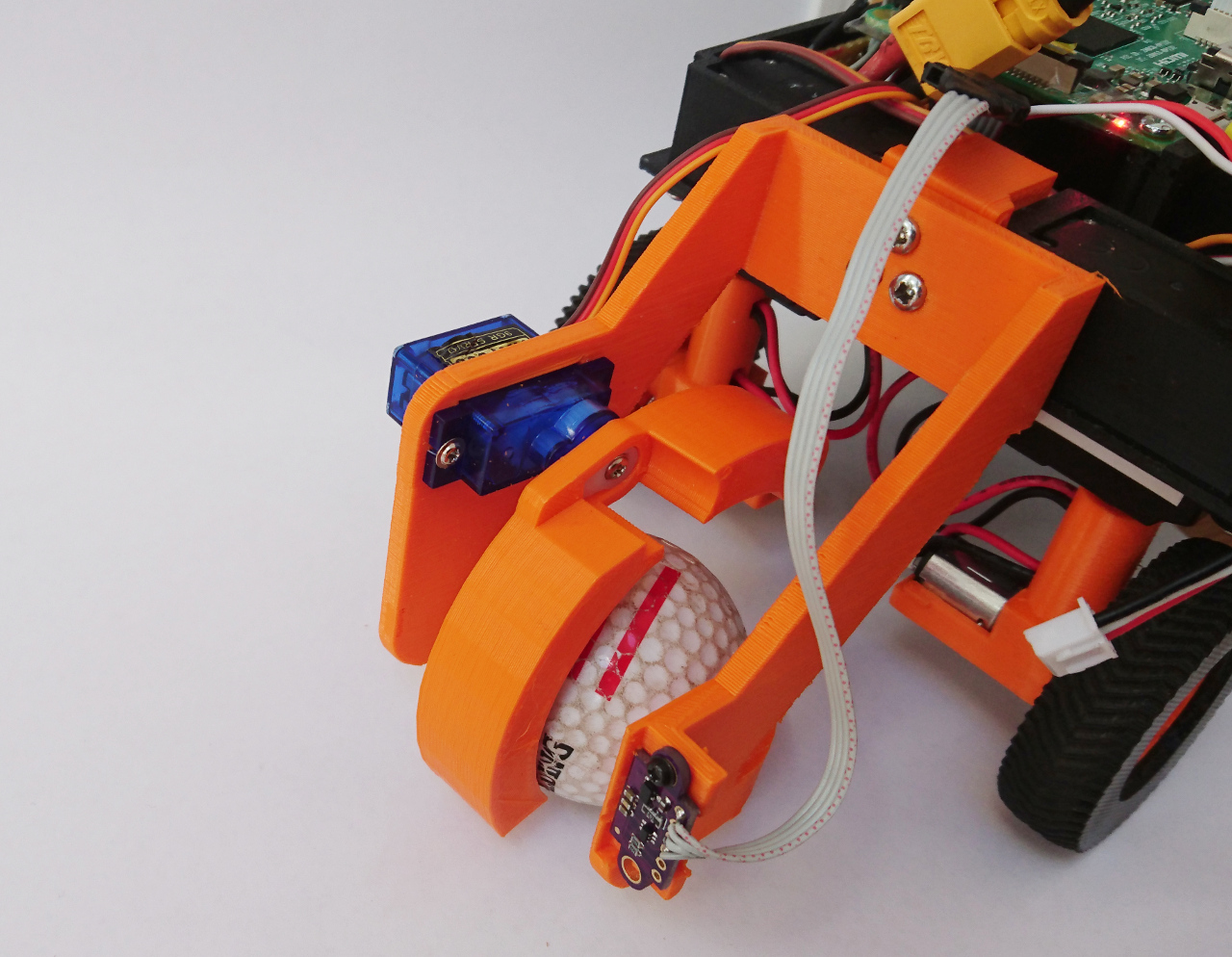

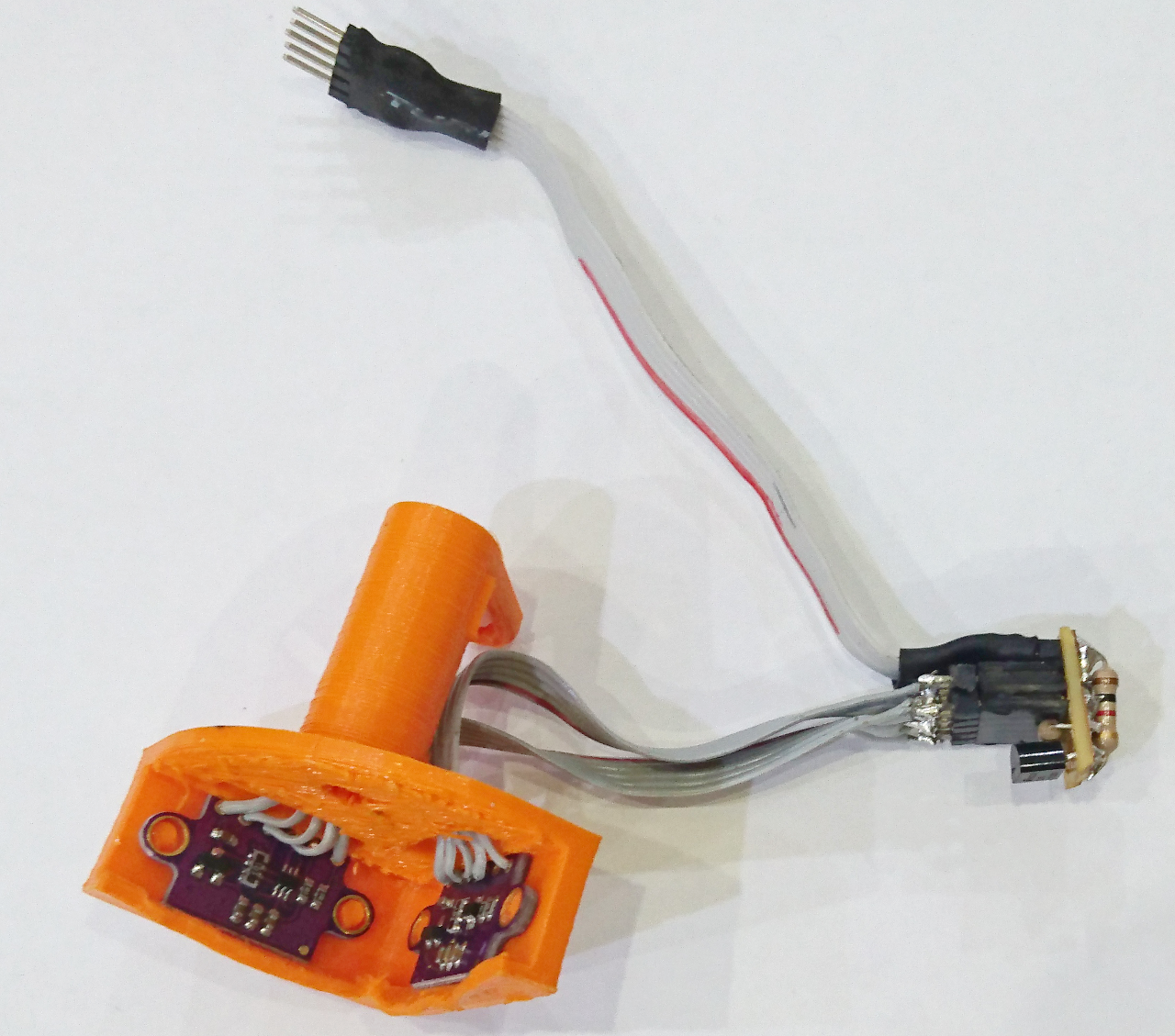

This time we didn't do as much as last time - our rovers were already built and ready (more or less). Making the golf ball catcher and minor improvements to existing bits and pieces (the PiNoon holder and the VL53L0X sensor holder) were less exciting in comparison to the Nerf gun! It required engineering (and we again used Sketchup for our designs - luckily provided on the school computers, too). Also, it was really nice seeing the frowns on some students' faces, not understanding how two rotating cylinders can do the job, turn to broad smiles when we finally spun it up. I am sure the moment we get through The Duck Shoot challenge there'll be unapproved software tinkering to increase motor speeds - just for fun! Oh, and I hope we didn't leave a lot of mess behind us in the classrooms we used for our club.

Same as for the previous PiWars, we did lots of 3D printing, too. CEL's Robox 3D printer was put to the test. I am sure I did a few hundred hours of printing for us. Last year the dual material head developed a problem which was, after all, quickly sorted out by CEL's engineer. So this time we had a spare head. And for a good reason (the same old head developed the same old problem this time, too). Also, the ability to print two materials next to each other (flexible NinjaFlex and PLA/ABS) helped some things...

Support And Sponsors

Same as last year, it is worth mentioning that our club had support from a few companies:

Vectric originally sponsored one rover (it is still going!) and this time provided our team with T-shirts for the day. Also, we always knew that if we needed any CNC and/or 3D printing we could go to them!


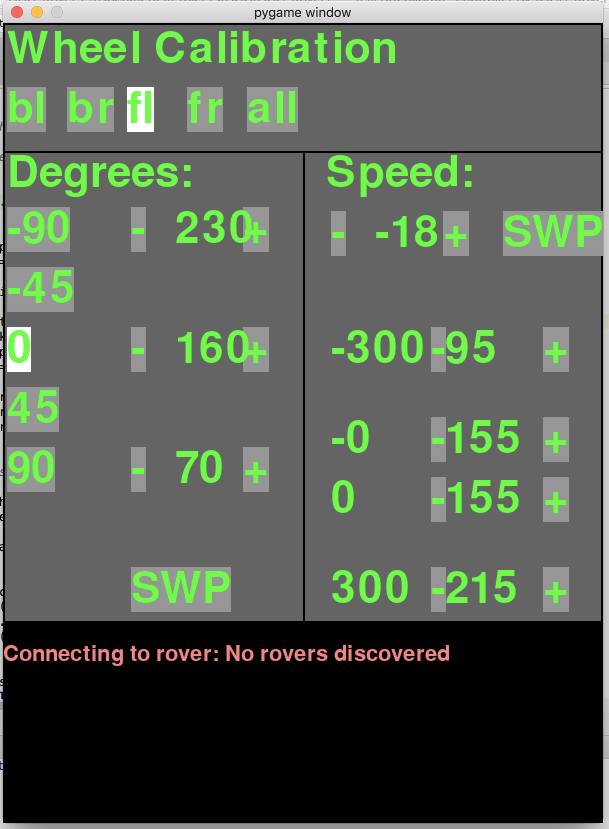

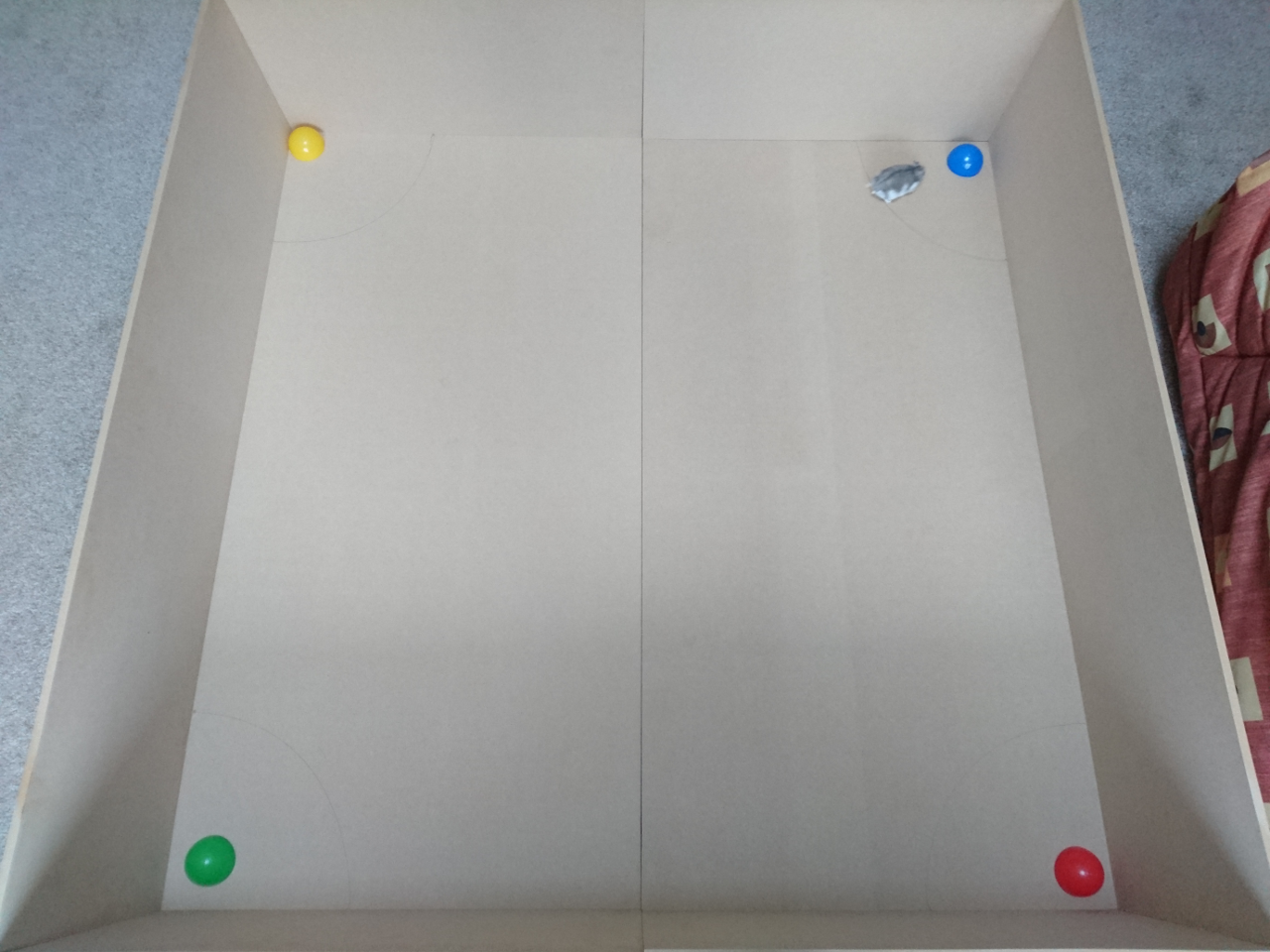

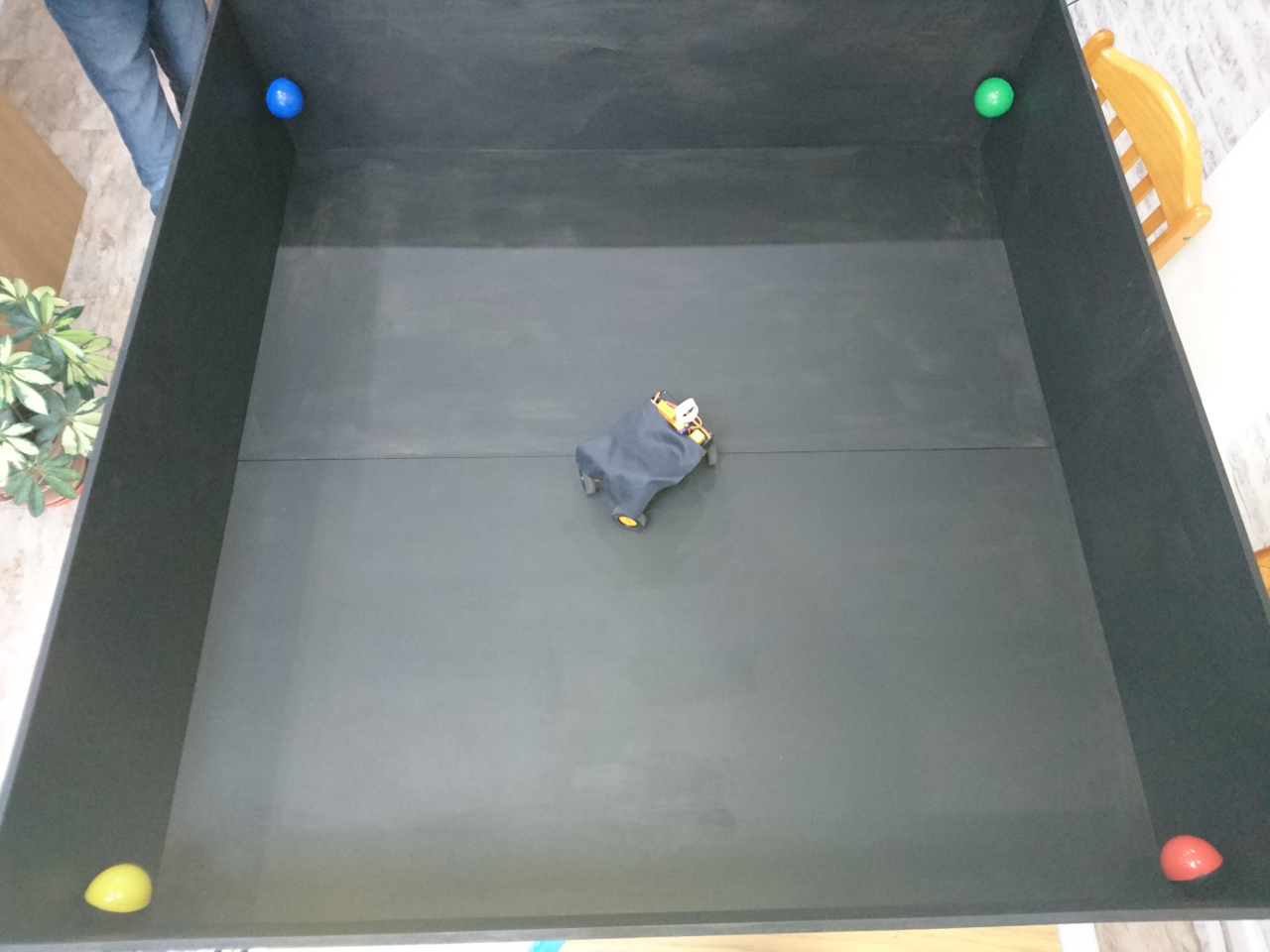

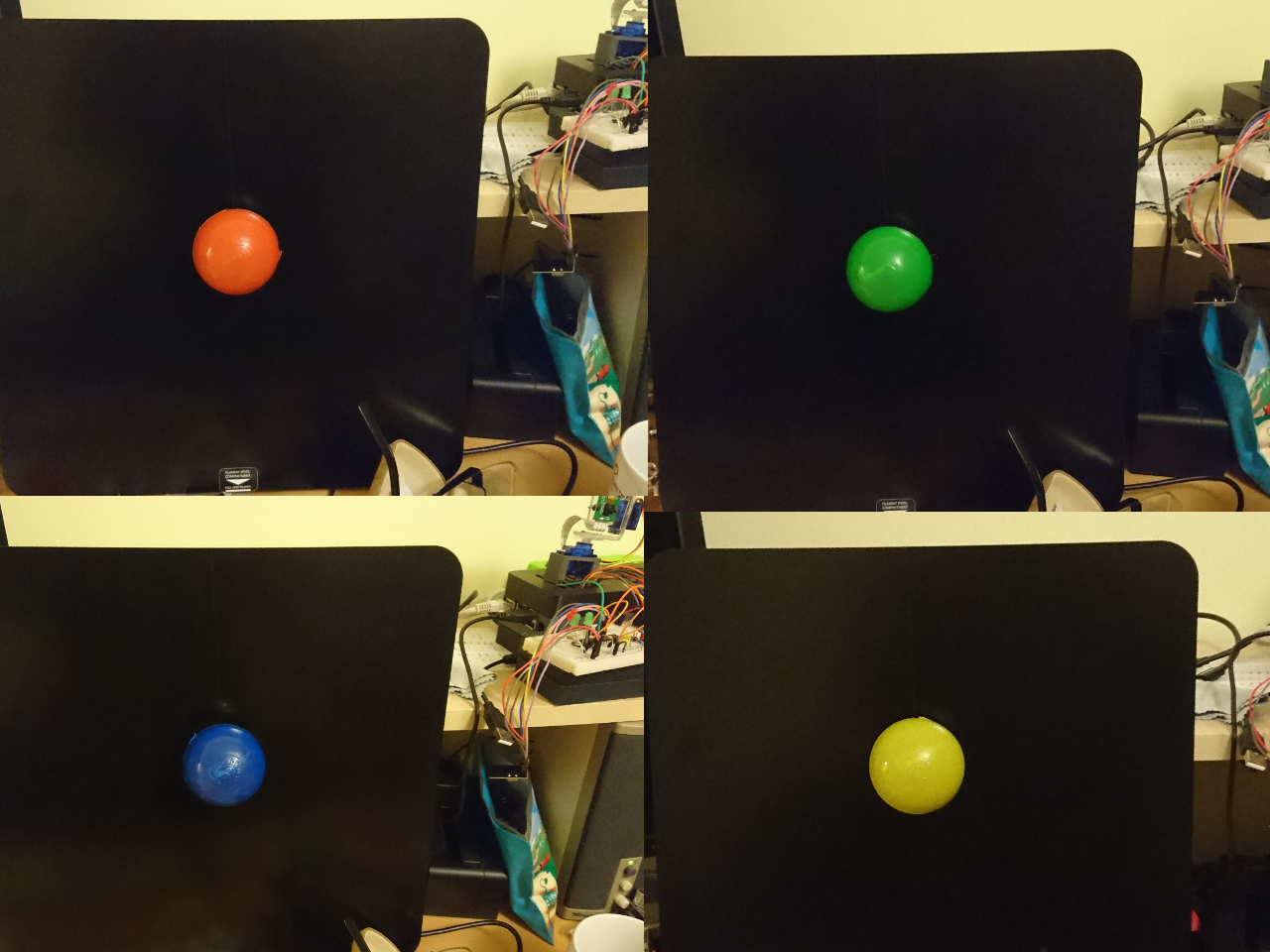

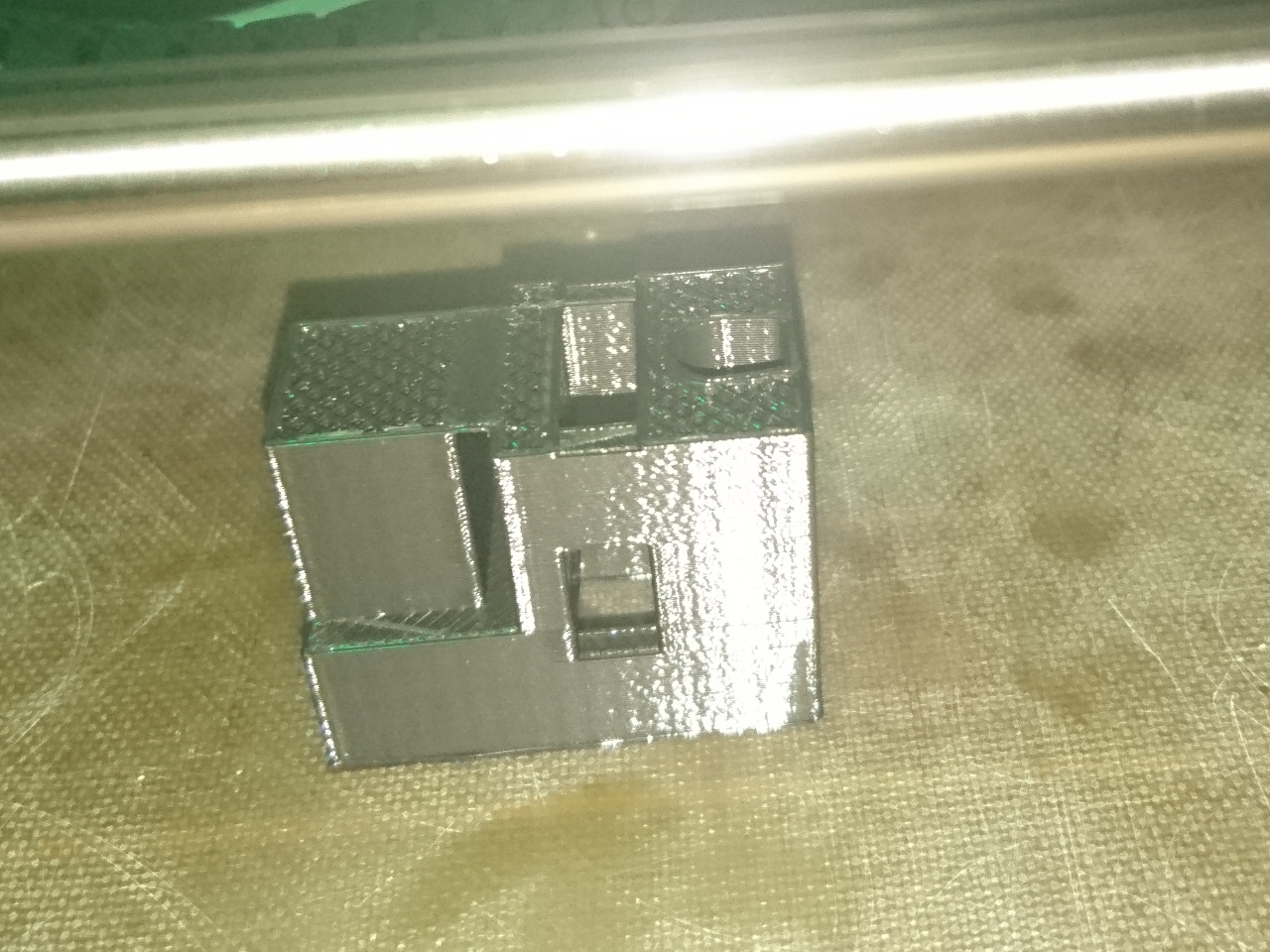

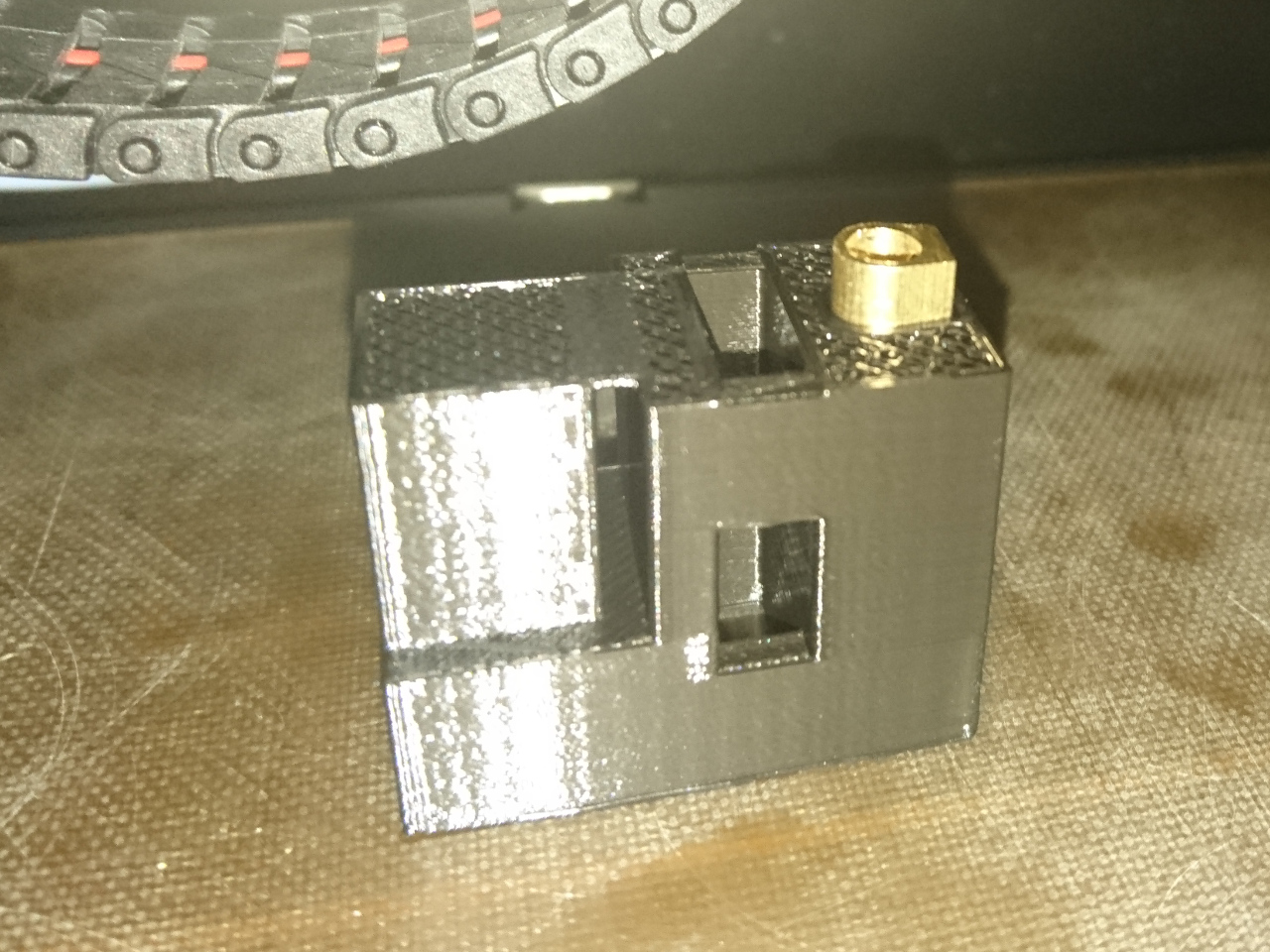

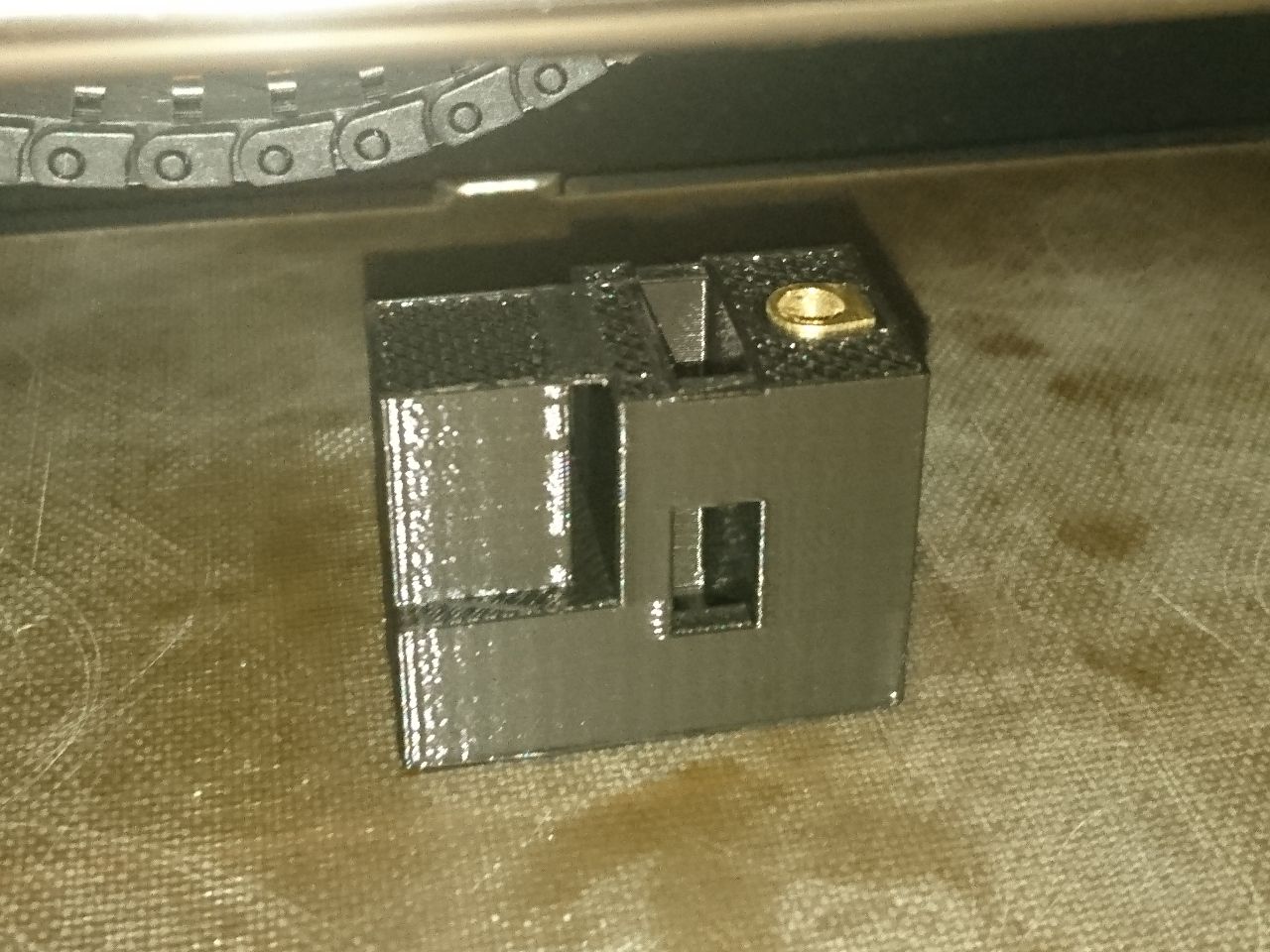

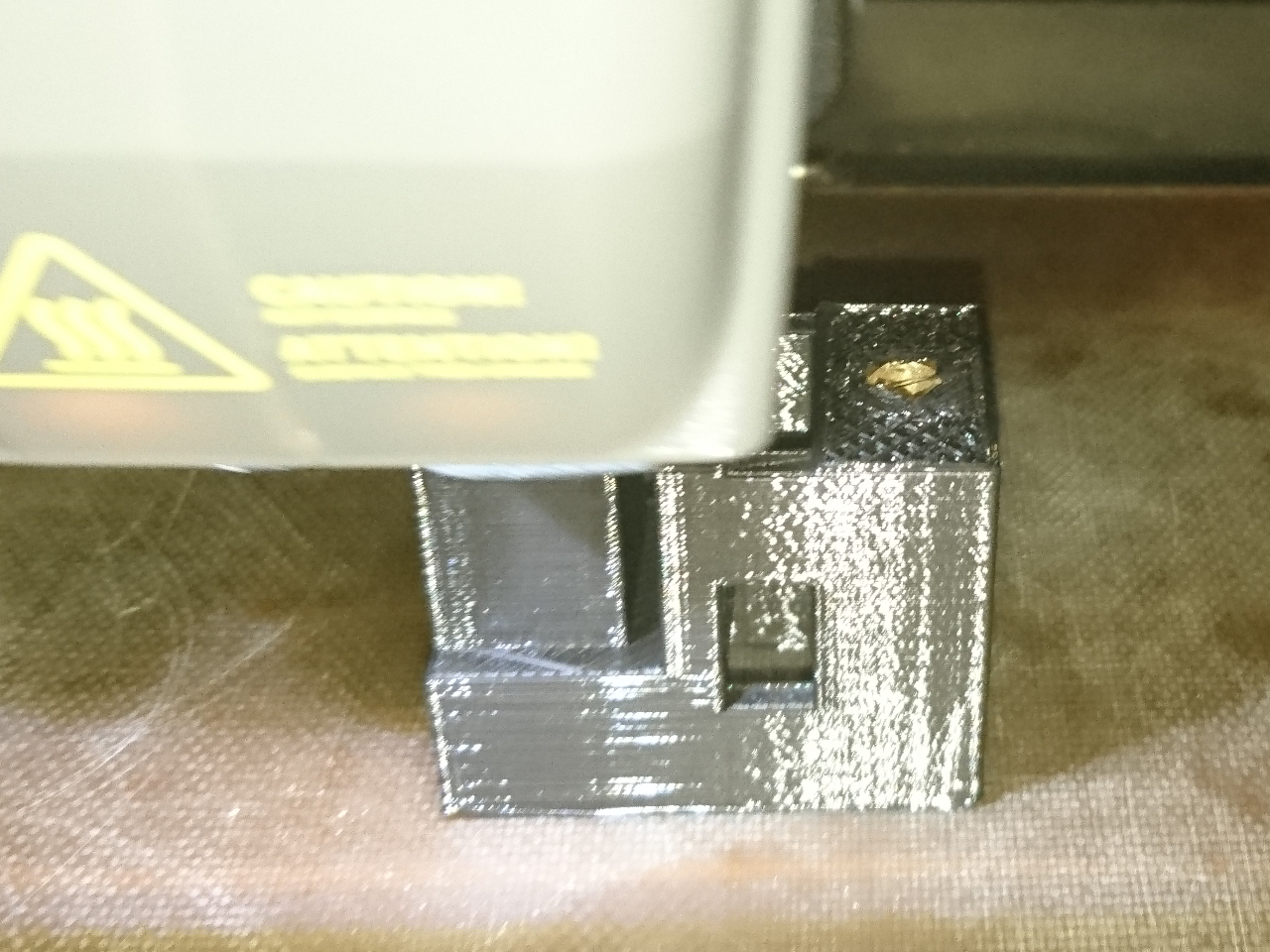

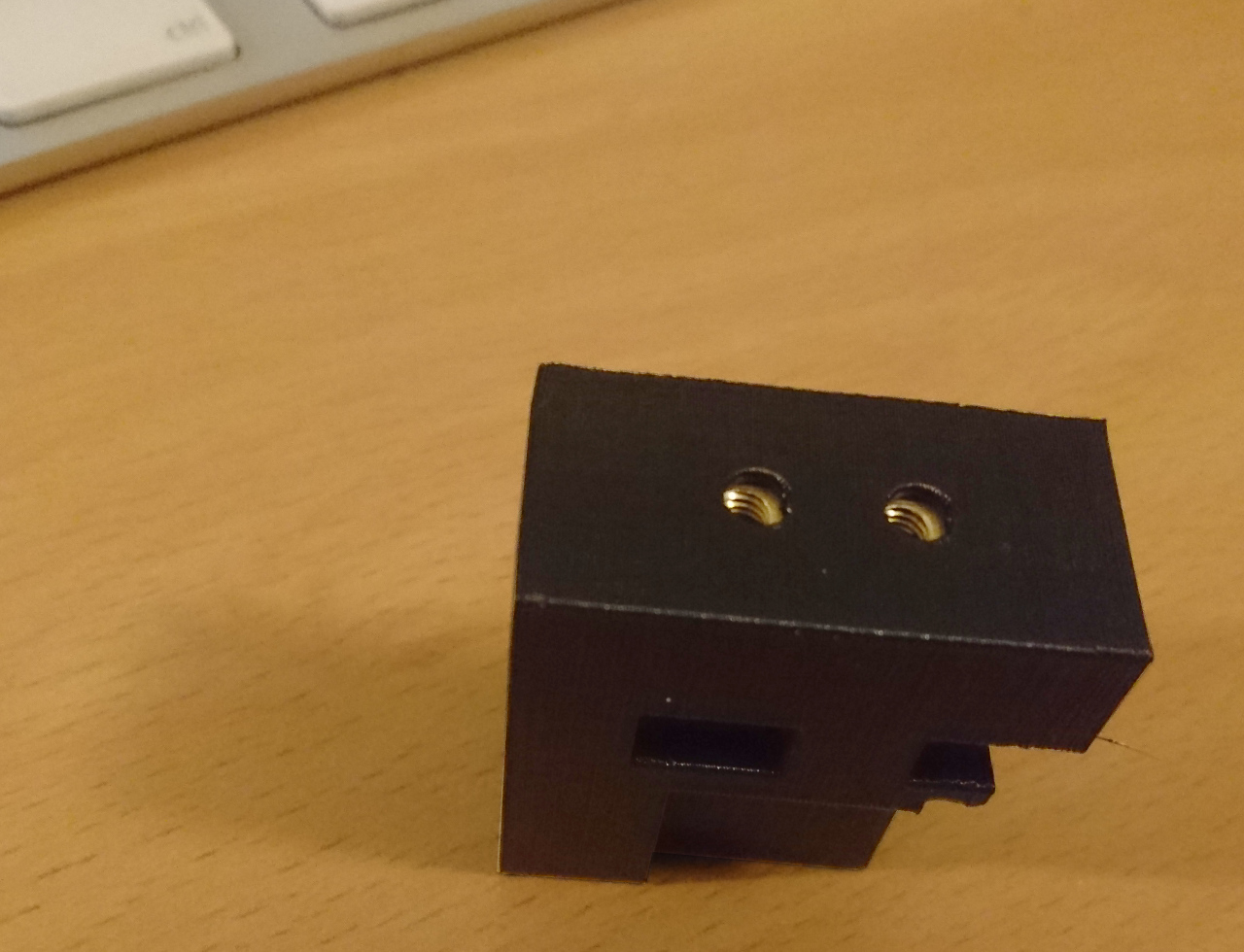

Black Pepper sponsored the other rover last time (now completely upgraded) and gave us all the needed support this time (even pledging money or parts when needed). The black stress balls they passed over worked as holders for the Somewhere Over the Rainbow balls. And we shouldn't forget the famous 'Rover Calibration Unit' they paid to have printed:



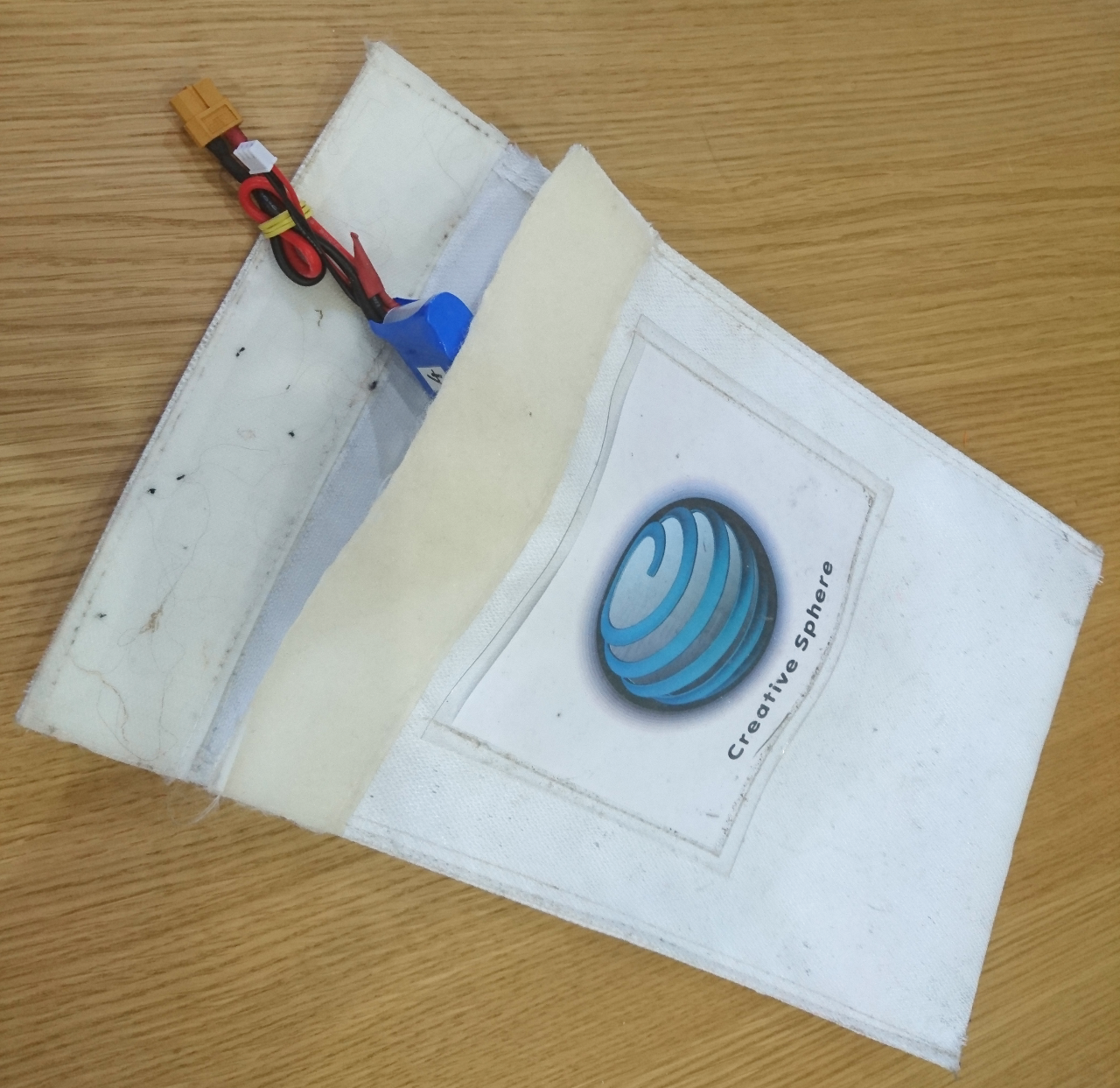

And last but not least, Creative Sphere funded 3D printing (filament and otherwise) and some small bits and pieces (for instance extra servos/ESCs, an ATmega328p chip for the ultrasonic sensors or an upgrade of the 9-axis sensor (MPU9250)).

Aside from those companies, we must mention some parents for their support - especially Mujeeb Parambath, who supported us not only morally but materially, donating money for another couple of distance sensors and a PS3 Bluetooth controller.

Lessons Learned

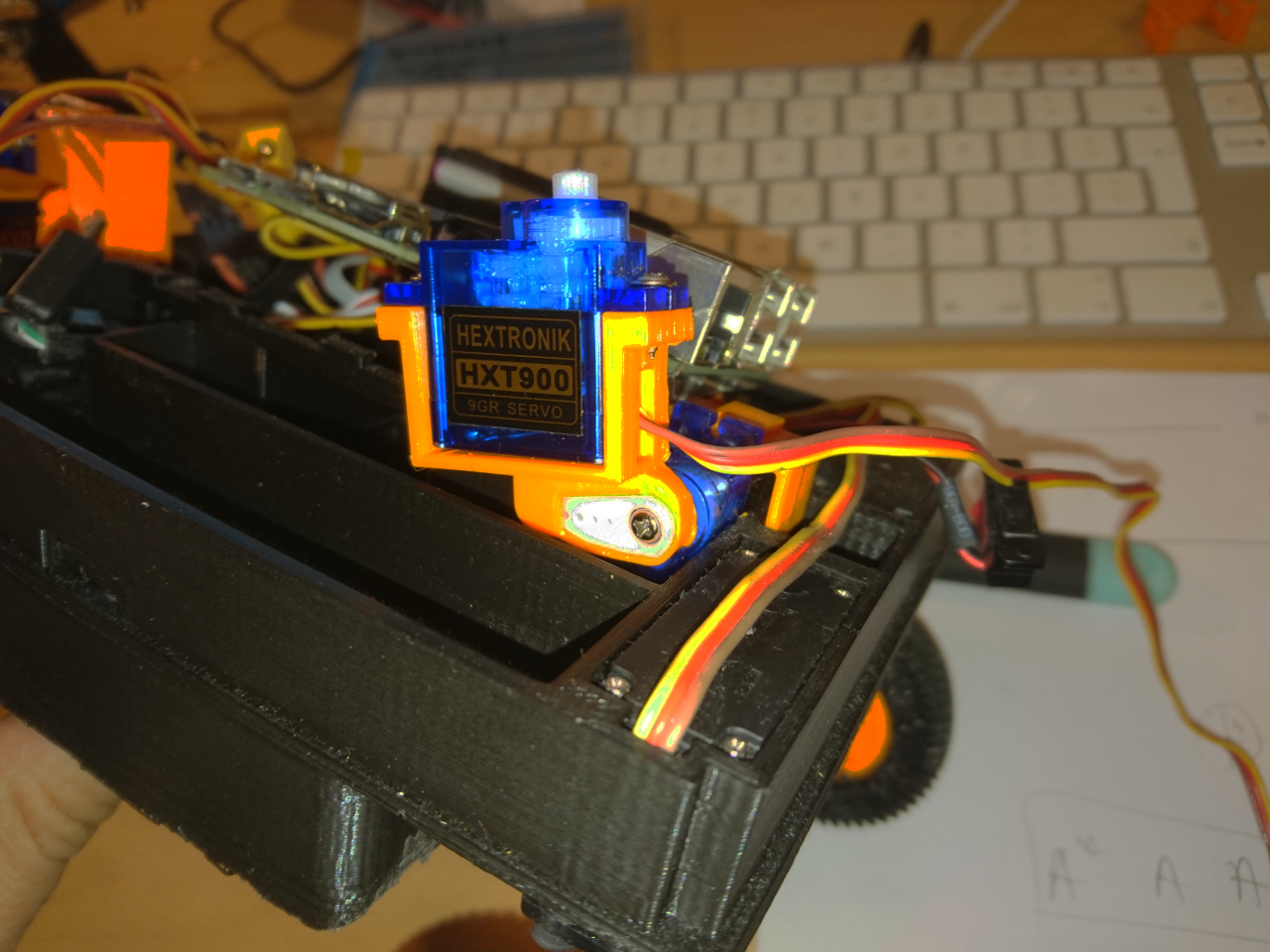

Don't leave the hard parts for last. Do computer vision sooner (because it is fun). Rewrite code more often (is it in the Agile manifesto or close to it?). Start preparations before Christmas - not after.

But not all lessons were at our expense. For instance, 'always have spare parts' (read: servos) worked well, as we broke a couple in the process of practising for the PiNoon challenge. Keep options open - we decided to stick with infrared ToF sensors over ultrasonic ones due to the extra time we needed to make them work reliably in the first place.

See you all on Saturday!

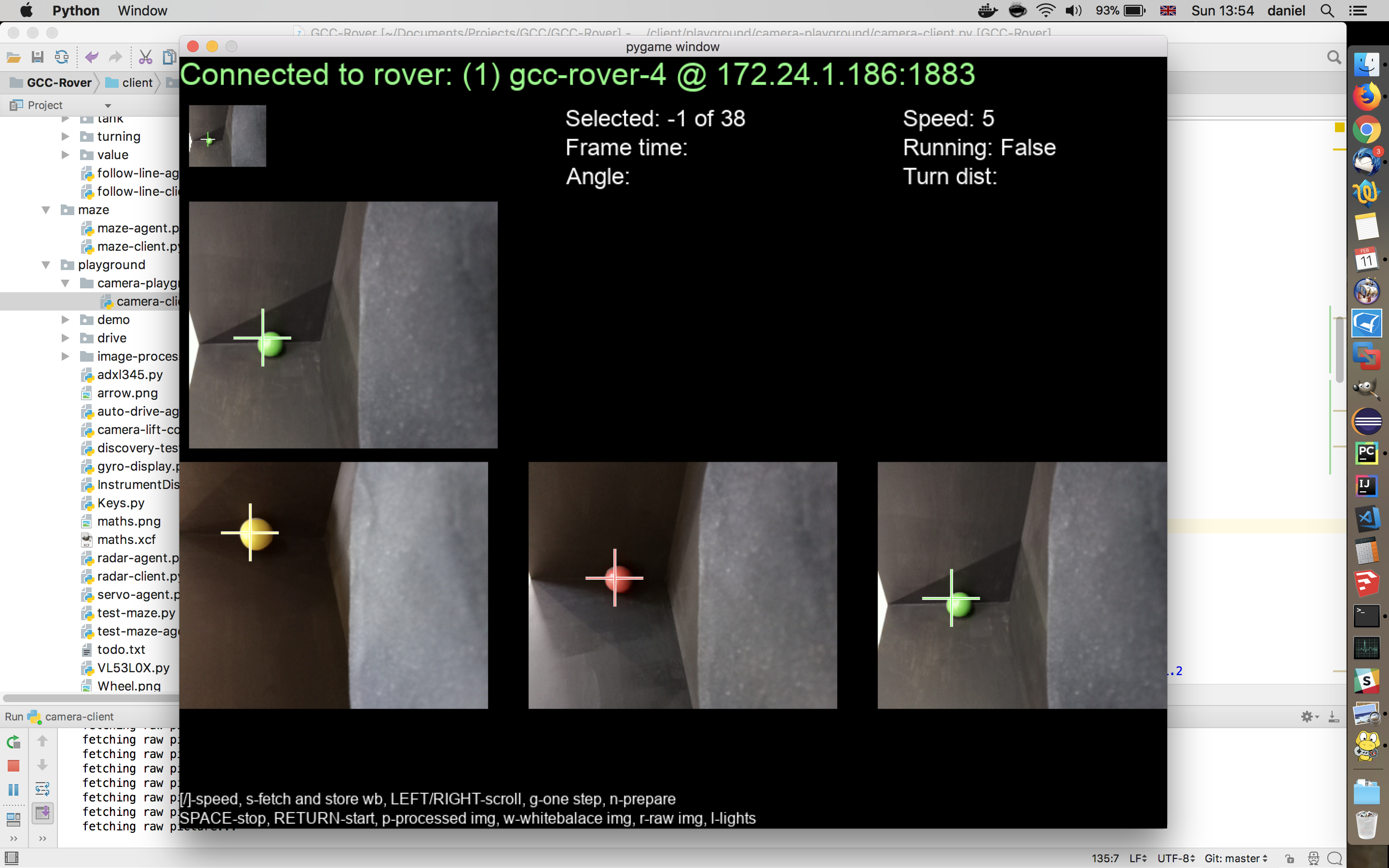

Left and right orientations are exactly what we needed this time.


The left image is of the found contour, the middle one is of the mask applied to the hue channel (see above what the complete hue channel looked like) and the last image is the result... well, for looks!


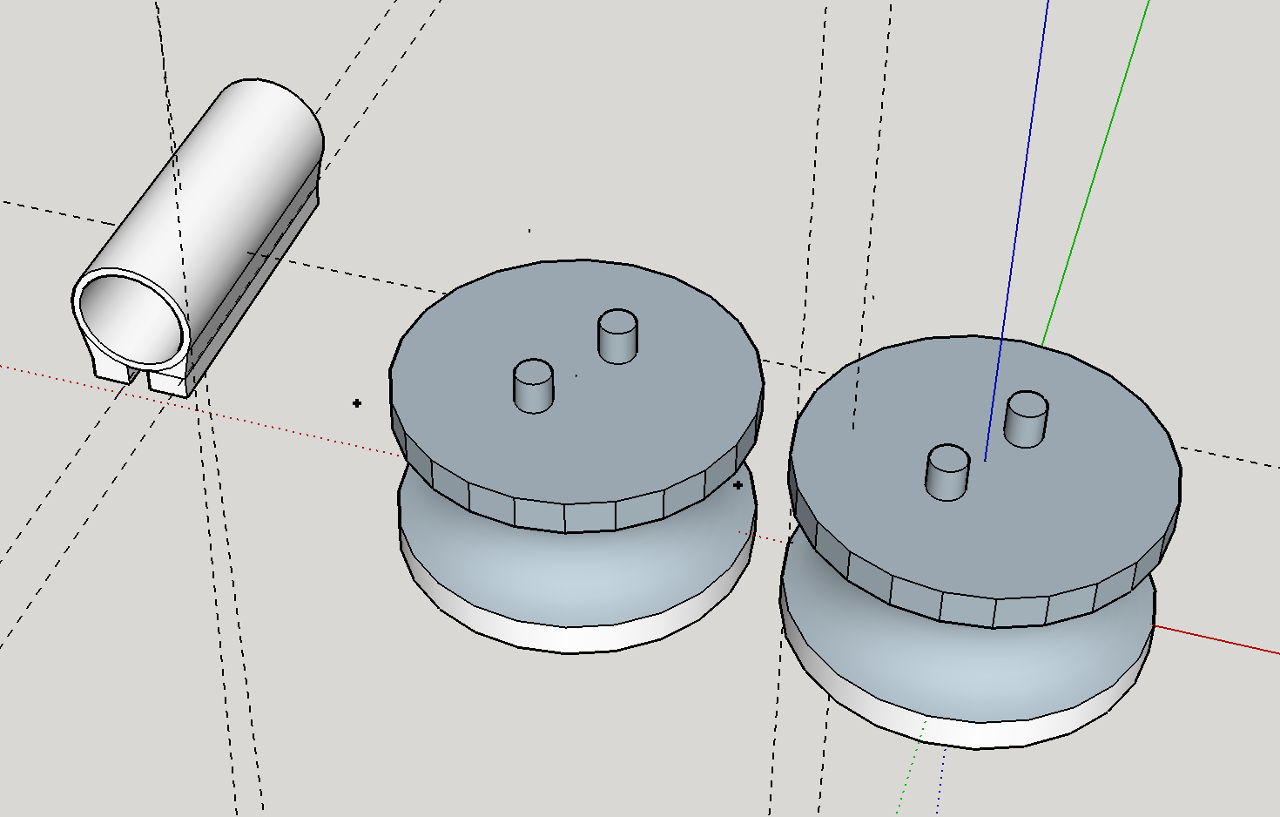

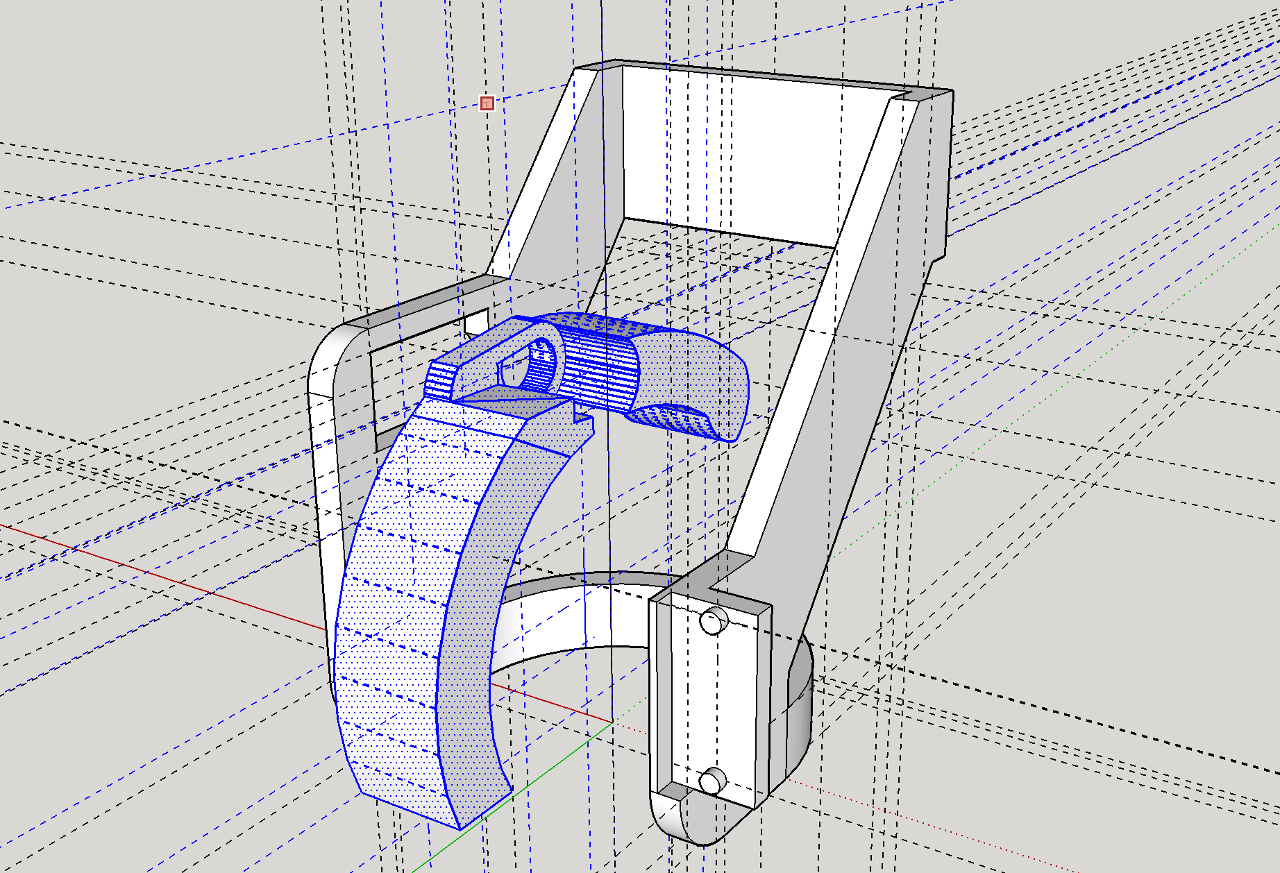

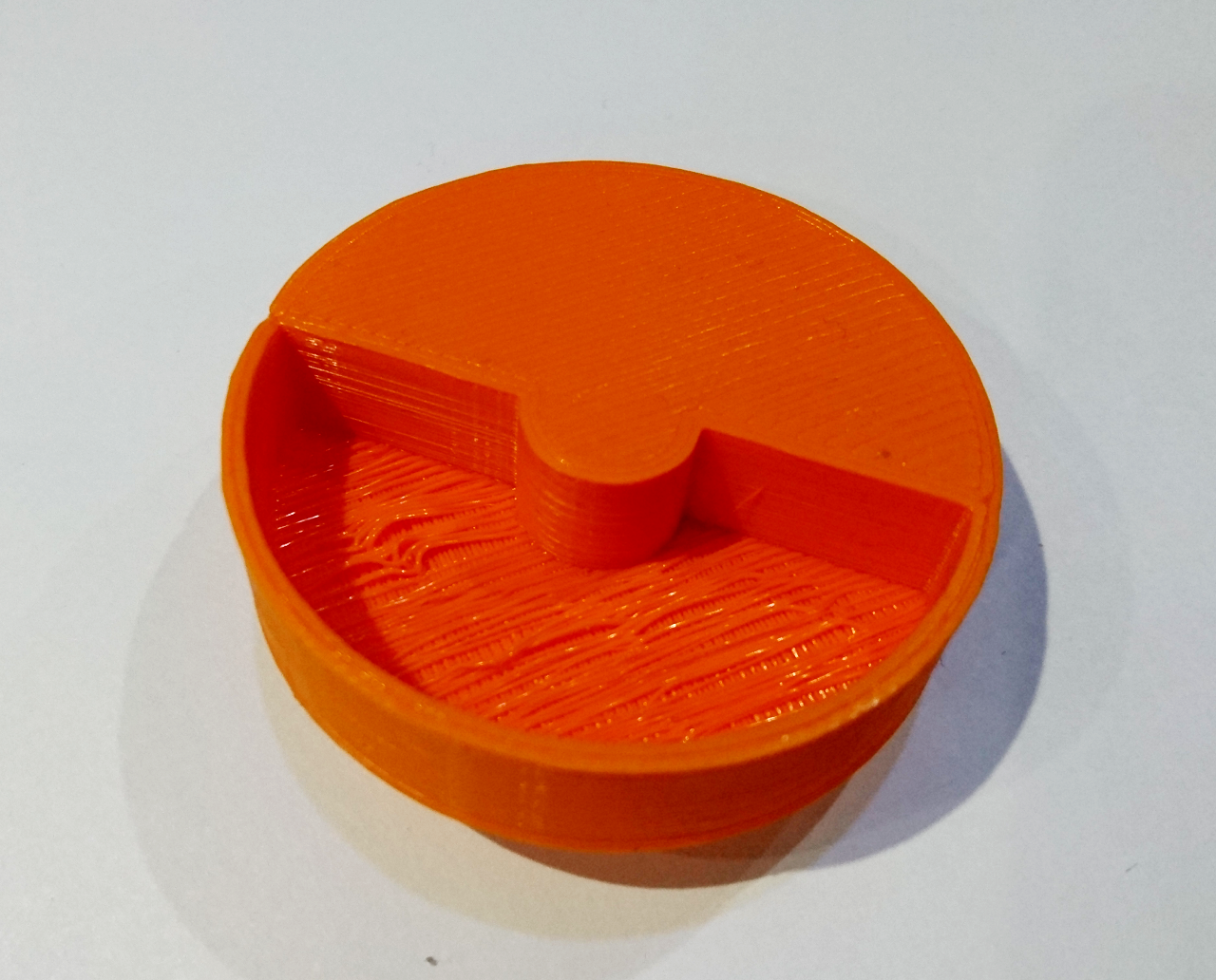

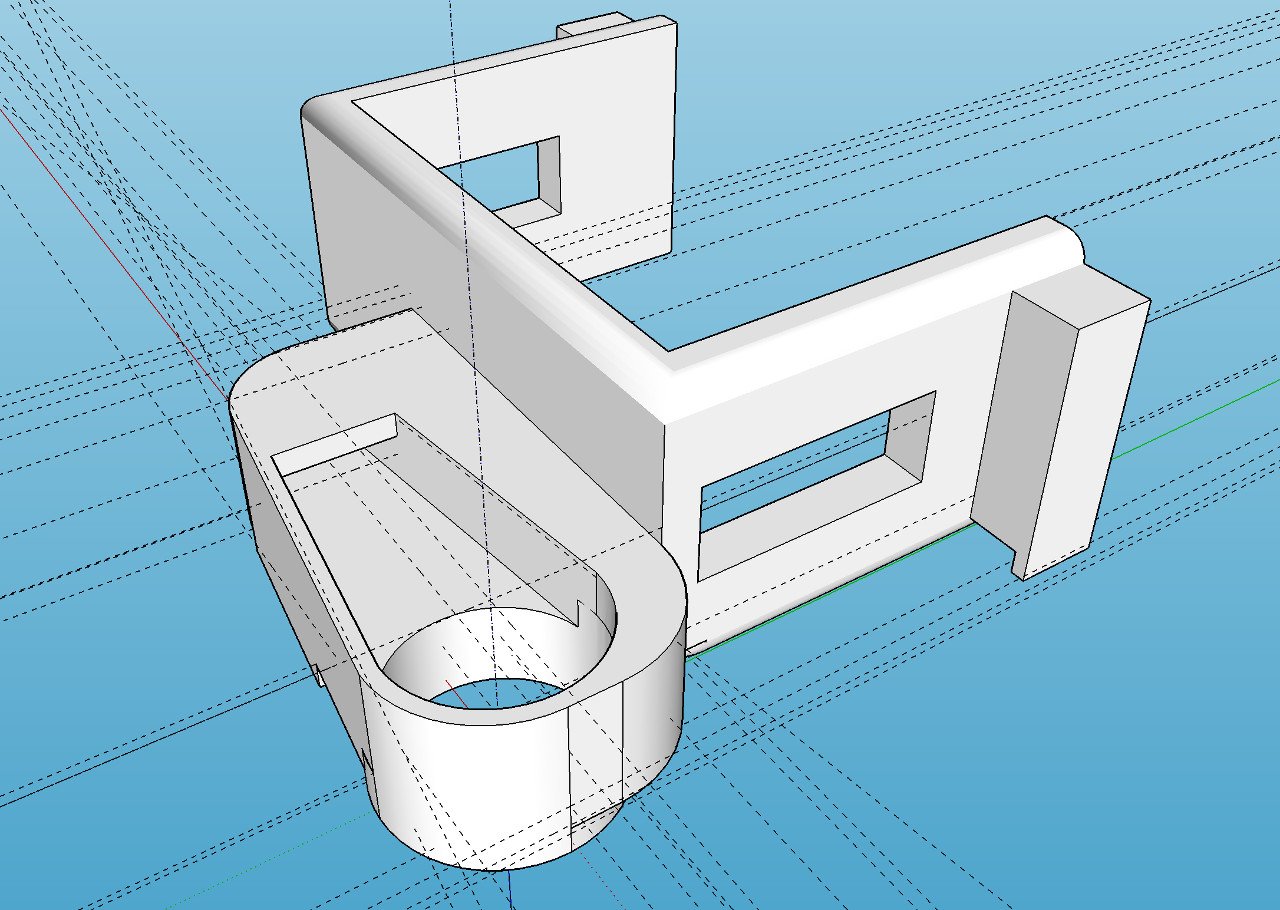

The Nerf dart would be somehow delivered in between two spinning cylinders and would then be propelled forward through the barrel. We started with designing a concave cylinder in Sketchup first, as it seemed to be the hardest challenge of all. Fortunately it wasn't half as hard as we thought.


Still frame from the anime


Still frame from the TV series

Also, it seemed that the best results others achieved were when they had some way of 'capturing' the ball.


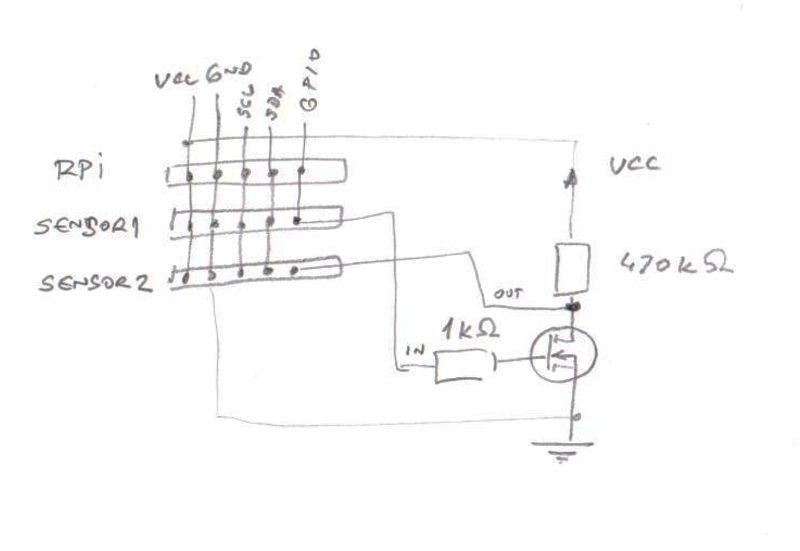

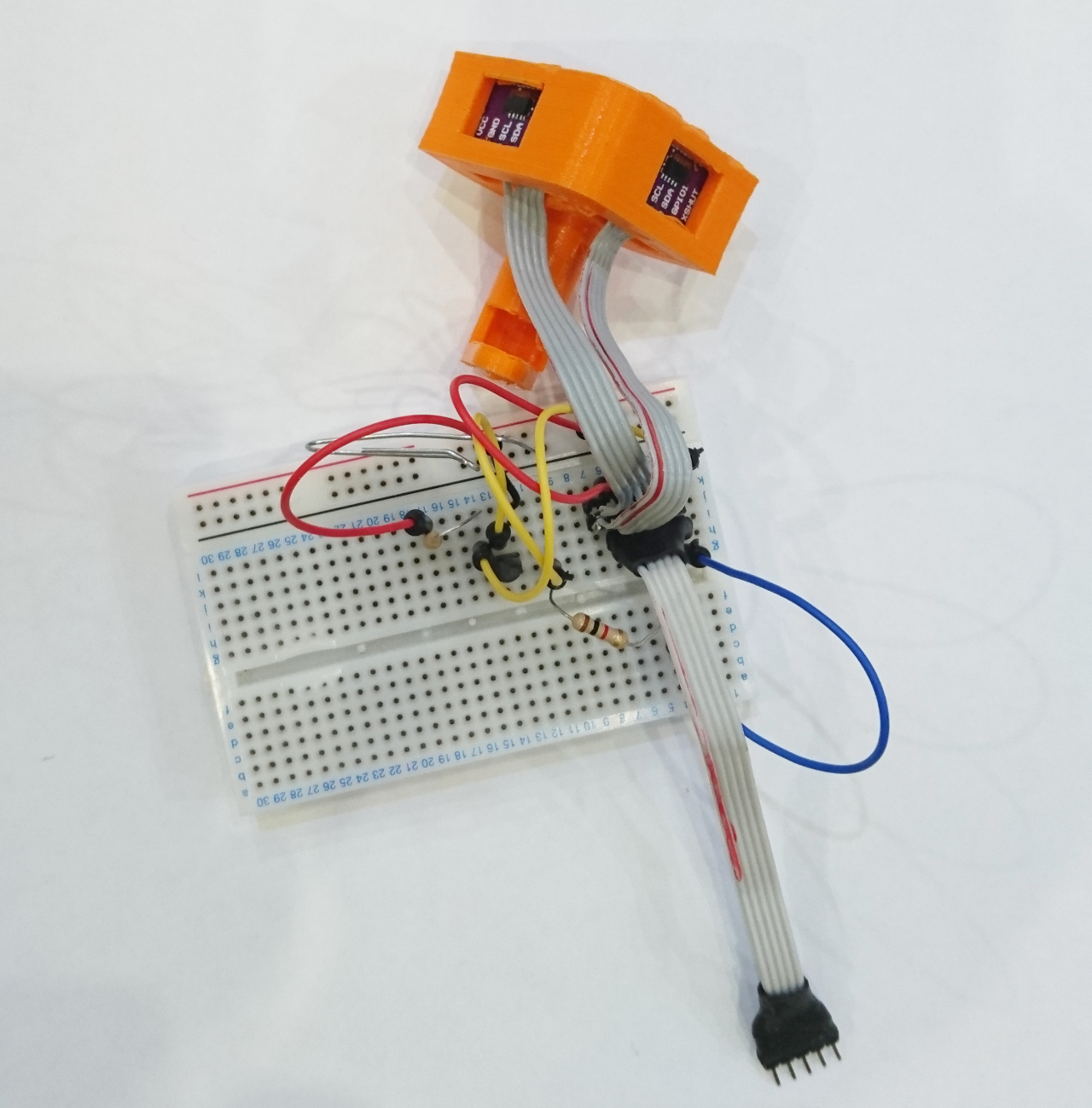

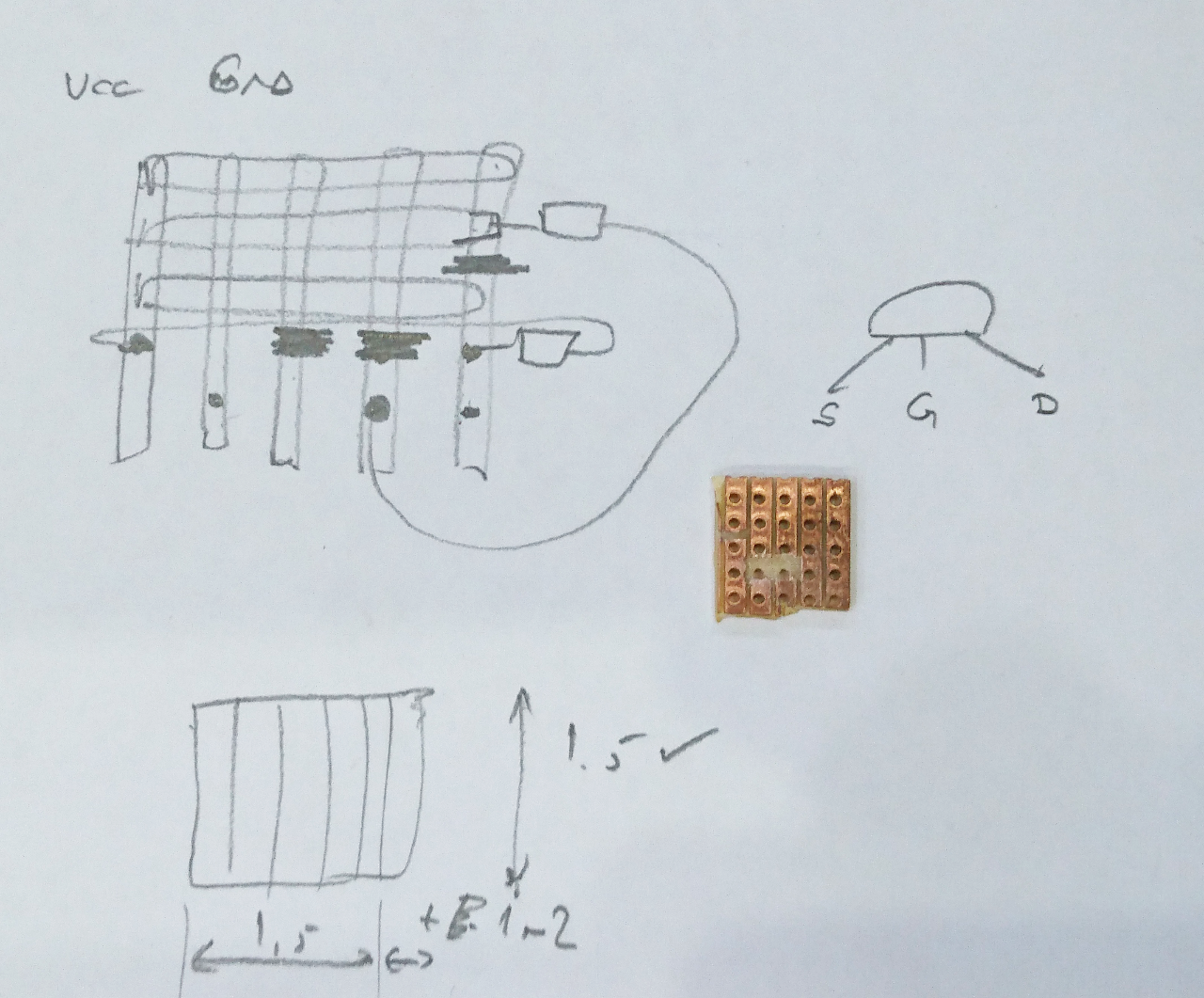

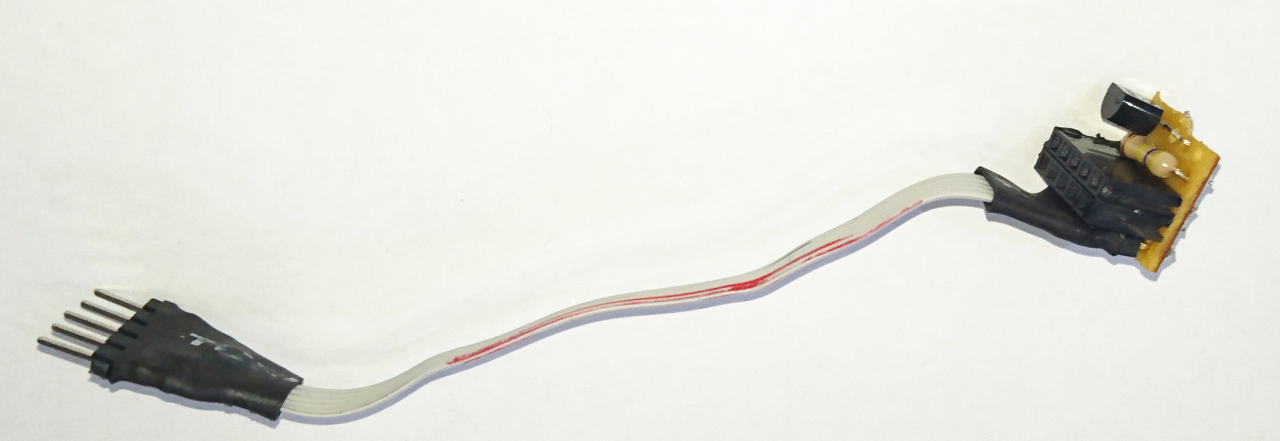

The 2N7000 FET transistor (which, btw, I somehow had in a box of spares) seemed to be ideal for the 'not' gate.


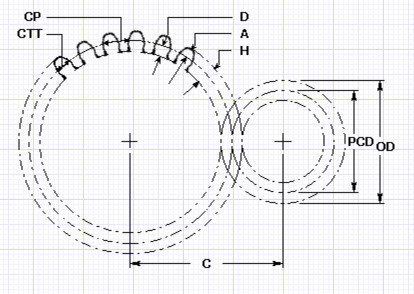

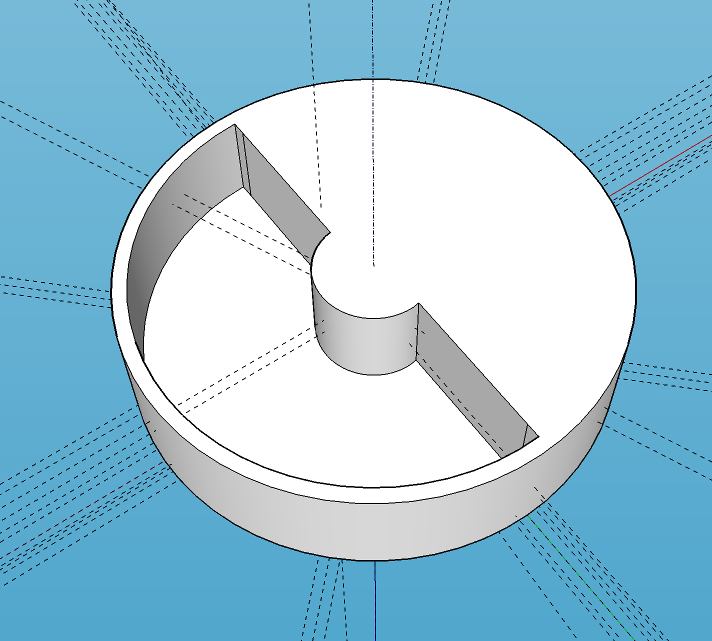

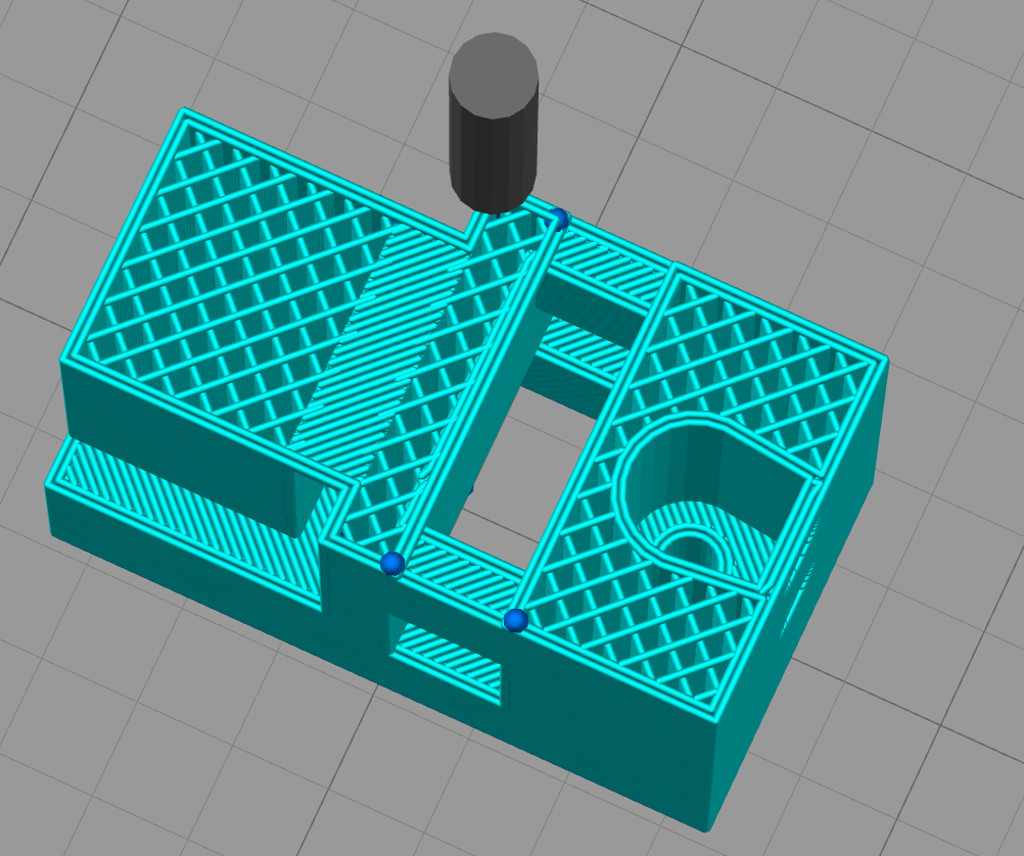

Next was a wheel to help us calibrate the (no-load) speed of the motors. It is half filled in and half indented by 10mm - an attempt to use the same distance sensor for calibrating the speed of the wheel. The idea is to put the sensor at some close range (10-20mm) and spin the wheel, counting how many times a second the reading changes from the shorter distance to the longer one. The target speed can be 120RPM, which is 2 revolutions a second, and since the default VL53L0X 'time allowance' is 33ms, we should be able to take about 30 samples a second, of which 15 should show the shorter distance and 15 the longer. The software for it is still pending.
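Until the real software exists, the counting idea can be sketched roughly as below. This is only an illustration under assumptions: readings arrive at a fixed interval, a single threshold separates the 'filled' half from the 'indented' half, and the function name and the sample values are invented for the example.

```python
def estimate_rpm(distances_mm, sample_period_s, threshold_mm):
    """Estimate wheel RPM from evenly spaced distance readings.

    Every change from a near reading (filled half) to a far reading
    (indented half) is counted as one full revolution.
    """
    if len(distances_mm) < 2:
        return 0.0
    revolutions = 0
    was_far = distances_mm[0] > threshold_mm
    for distance in distances_mm[1:]:
        is_far = distance > threshold_mm
        if is_far and not was_far:
            revolutions += 1
        was_far = is_far
    duration_s = (len(distances_mm) - 1) * sample_period_s
    return 60.0 * revolutions / duration_s


# Simulated wheel: 15 samples per revolution, 8 near (15mm) then 7 far (25mm),
# sampled 30 times a second for 10 seconds.
samples = [15.0 if (i % 15) < 8 else 25.0 for i in range(301)]
print(round(estimate_rpm(samples, 1.0 / 30.0, 20.0), 1))  # prints 120.0
```

With only about 15 samples per revolution the estimate is coarse over short periods, so measuring over several seconds (as in the simulated run) gives a steadier number.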


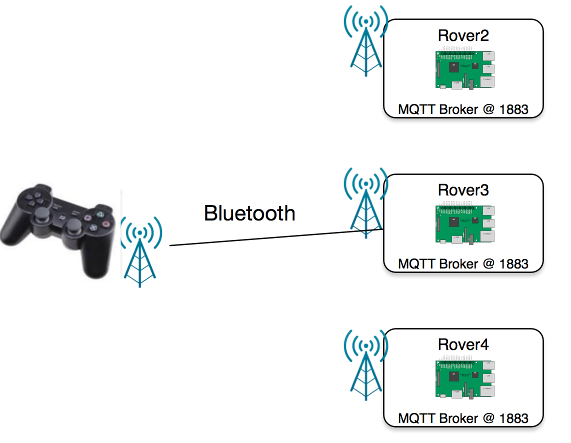

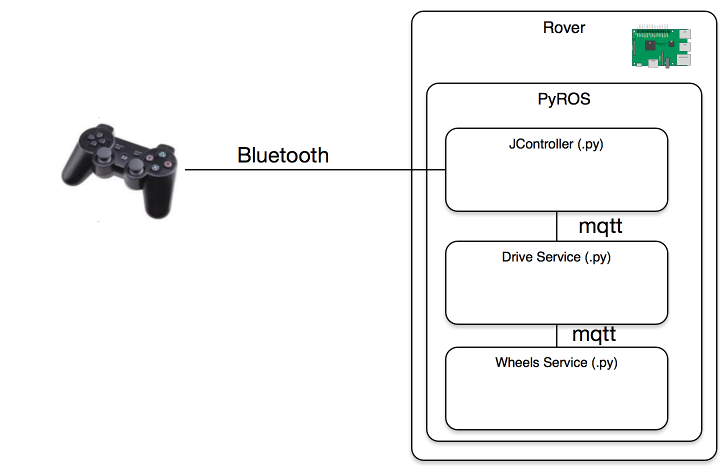

The way we will do this is with a (knockoff) PS3 controller, connected via Bluetooth to the rover. This is way better because there would be far fewer points in the packet's route, and because it's more direct, it should have a shorter travel time, meaning less delay. Also, there is none of the three-way acknowledgement that TCP robustness relies on. YAY!


Guess what we will be doing on our next club meeting on Wednesday! :)

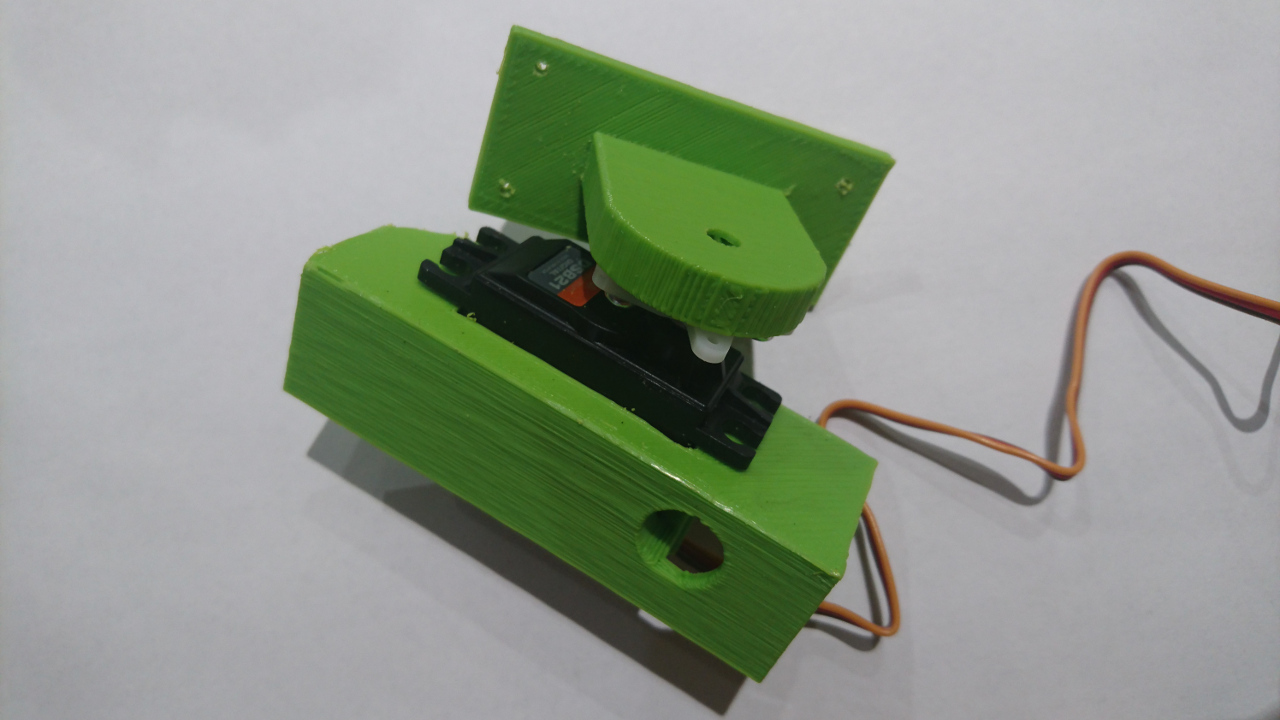

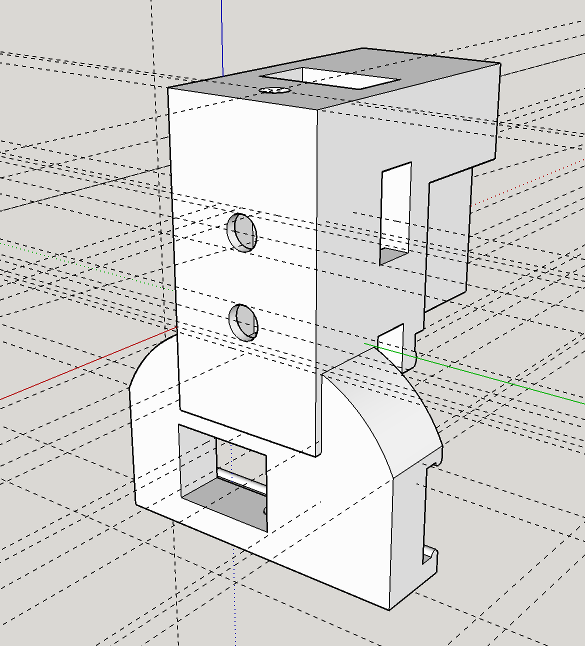

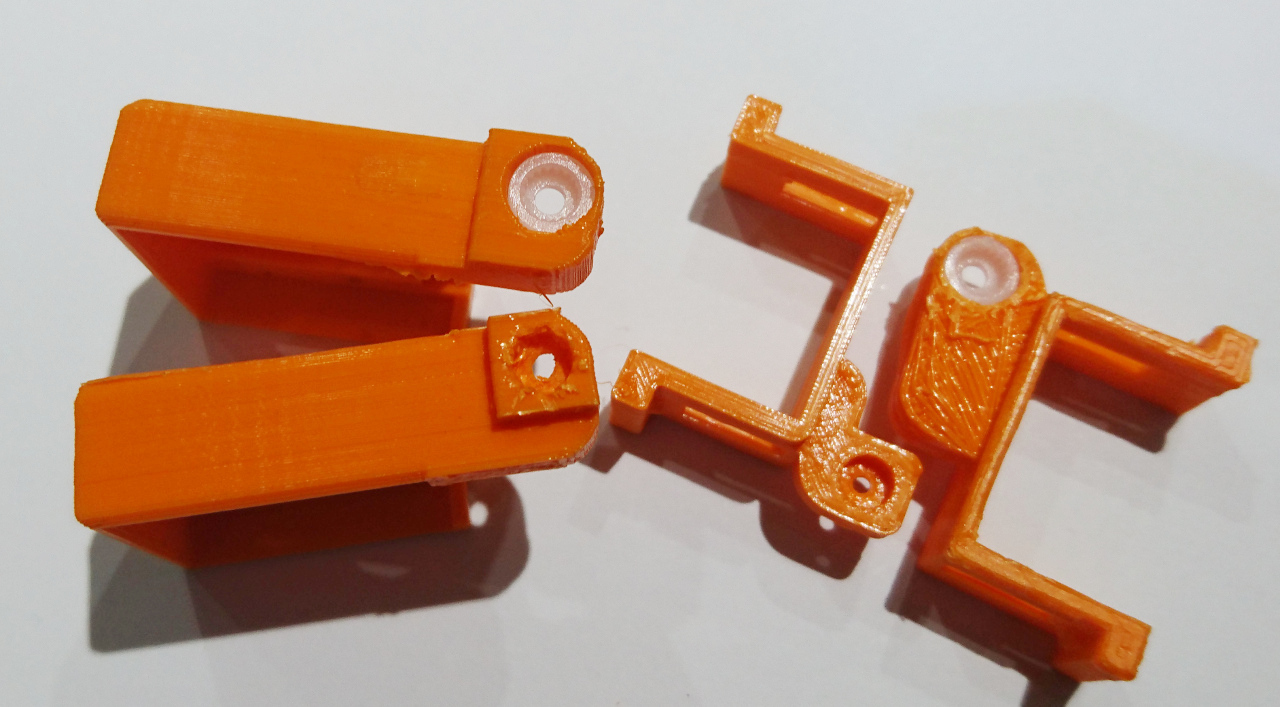

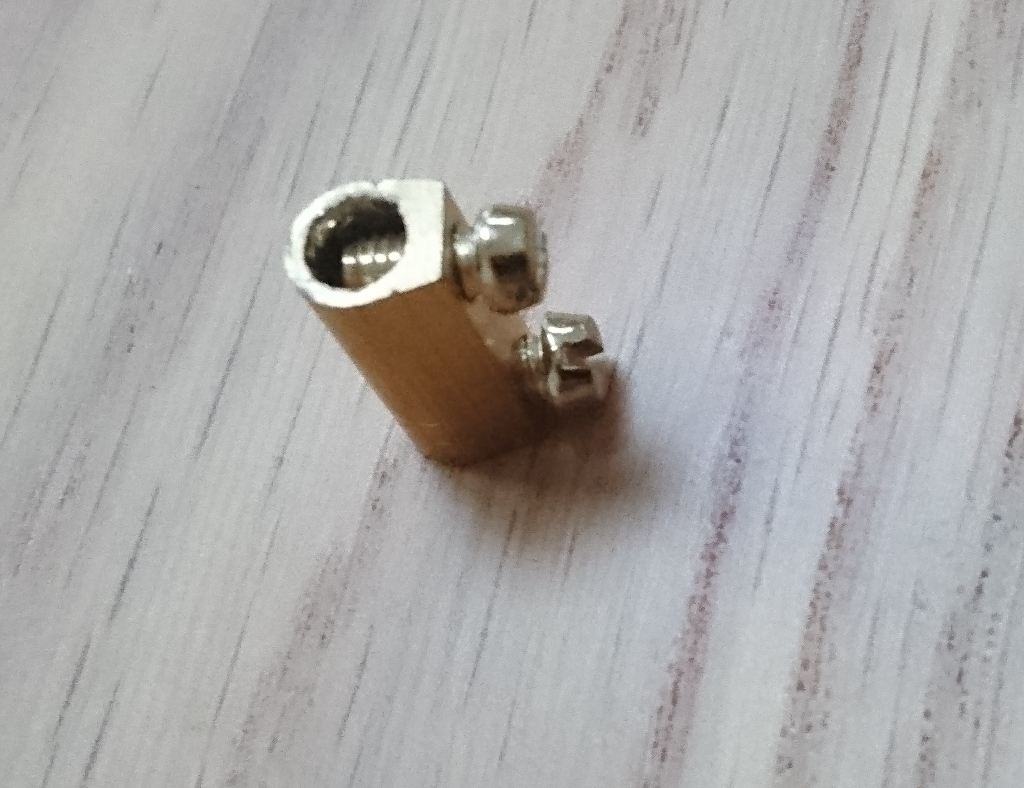


One such design decision was the way the camera arm is attached to the servos. The idea was that if an appropriately ratcheted hole is made, the servo shaft would fit and hold. It did to an extent, but the whole connection was a bit flimsy and would easily slip. Calibrating the camera servos and then having the arm slip on the servo shaft would cause even more damage (or add to the slipping, rounding the hole even more). The solution for this is to incorporate the original servo arms into the 3D printed parts. The result is here:

Now we finally have our 'secret' weapon ready to be deployed:


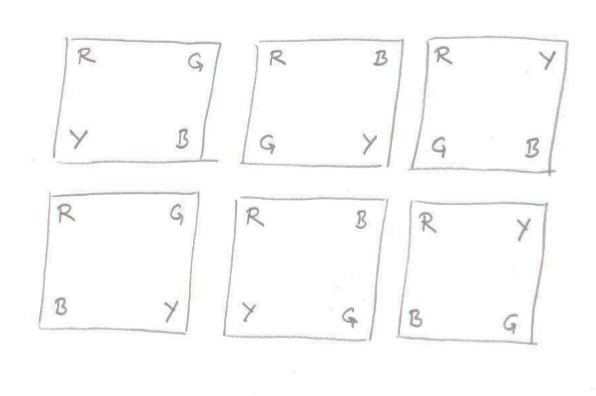

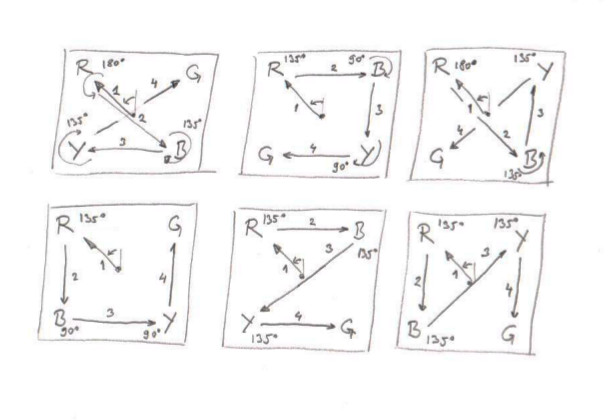

It was supposed to be used for following the line (which never worked as planned). Our first go will be adapting the existing software, which captures images from the camera, scales them to 80x64 pixels and moves them to numpy for processing. The idea is to use code that is as simple as possible for detecting the presence and position of red, blue, yellow and green in the picture. Also, the code should be as modular as possible so we can, given enough time, later switch to more advanced software like OpenCV.
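To give a feel for how simple such colour detection can be, here is a rough sketch. It is not our actual code: plain Python lists stand in for the numpy array so it has no dependencies, and the hue ranges, thresholds and function names are guesses that would need tuning under real lighting.

```python
import colorsys

# Hypothetical hue ranges in degrees; red wraps around 0/360.
HUE_RANGES = {
    "red": [(0, 15), (345, 360)],
    "yellow": [(45, 70)],
    "green": [(90, 150)],
    "blue": [(200, 260)],
}
MIN_SATURATION = 0.5  # ignore washed-out pixels (assumed value)
MIN_VALUE = 0.3       # ignore dark pixels (assumed value)


def classify_pixel(r, g, b):
    """Return the colour name for an 8-bit RGB pixel, or None if it matches nothing."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    if s < MIN_SATURATION or v < MIN_VALUE:
        return None
    hue_deg = h * 360.0
    for name, ranges in HUE_RANGES.items():
        for low, high in ranges:
            if low <= hue_deg < high:
                return name
    return None


def find_colours(image):
    """Return {colour: (pixel_count, centre_x, centre_y)} for every colour found.

    `image` is a list of rows, each row a list of (r, g, b) tuples.
    """
    sums = {}
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            name = classify_pixel(r, g, b)
            if name is not None:
                count, sum_x, sum_y = sums.get(name, (0, 0, 0))
                sums[name] = (count + 1, sum_x + x, sum_y + y)
    return {
        name: (count, sum_x / count, sum_y / count)
        for name, (count, sum_x, sum_y) in sums.items()
    }
```

The pixel count tells whether a colour is present at all, and the centre tells whether it is to the left or right of the picture. Keeping `classify_pixel` separate from `find_colours` is the kind of modularity that would let an OpenCV version replace either part later.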
